Introduction

In our last blog post, we discussed the challenges of fully autonomous software development, i.e., development with no human intervention. Toward the end, we explained why startups working on this problem are better off using generic models (like GPT-4) instead of investing in training their own Large Coding Models (LCMs). However, we also highlighted that training your own LCMs is a must for building a long-term moat in this space. In this article, we discuss the specific areas where LCMs can play a critical role.

For startups who are trying to compete with GitHub Copilot, there are three obvious benefits to using LCMs instead of generic LLMs:

- Cost of inference: Code-cushman-001 (Chen et al. 2021) is a 12-billion-parameter model developed by OpenAI that served as the initial model for GitHub Copilot. GitHub still uses a much smaller model than SOTA OpenAI models like GPT-4o. Note that the GitHub Copilot subscription is priced at $10/month, lower than the ChatGPT Plus subscription ($20/month).

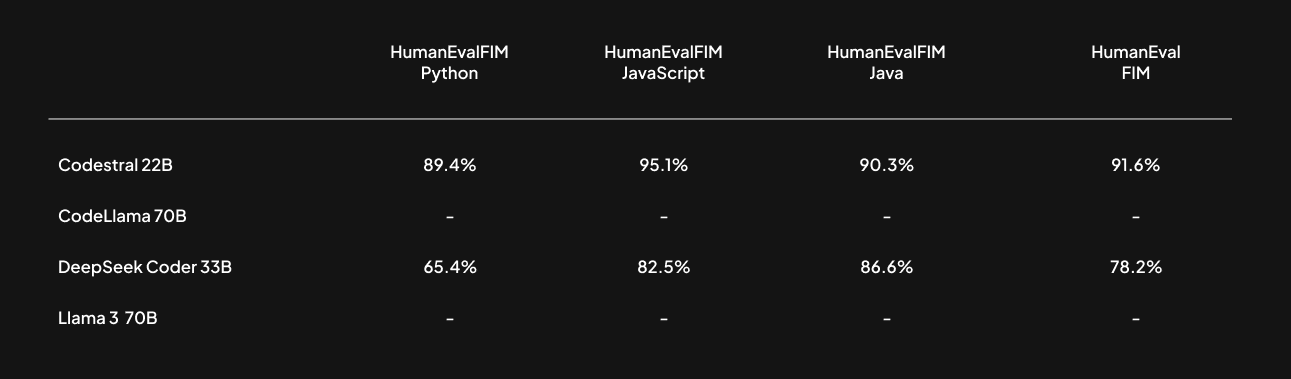

- Latency and UX: As the name suggests, GitHub Copilot is not meant for end-to-end development; it needs a human developer in the loop. The LLM's responses therefore must arrive with low latency, which makes LCMs that are as capable as LLMs but much smaller a natural fit. In fact, Codestral-22B, the latest open-weight LCM from MistralAI, exceeds Llama-3-70B (a model roughly 3x larger) on coding benchmarks.

- Privacy and Reliability: The Codestral vs. Llama-3 comparison shows that, in terms of quality, LCMs can be on par with LLMs roughly 3x their size. Because of their smaller size, LCMs can also be hosted locally, on-device. Self-hosting on local hardware is more reliable and also more secure, as your codebase is not shared with OpenAI.

But when it comes to end-to-end development, these three advantages of LCMs no longer hold. When you are building systems so much more capable than a copilot that human developers are no longer needed in the loop, you no longer have to worry about cost or latency. And once cost is out of the picture, you can also afford private models hosted with the large cloud service providers (Azure, GCP, and AWS all offer private compute environments, so your data is not sent to third-party servers).

Now that we have ruled out some of the misconceived advantages of LCMs over LLMs, let's look at why we actually need LCMs.

The Hidden Case for LCMs

GitHub Copilot was one of the earliest generative AI offerings to gain significant adoption. Code completion/generation is very similar to next-token prediction, the core skill of LLMs. However, as we go deeper into a real-world problem like autonomous software development, we realize that code generation only scratches the surface: there are more layers to this problem, and even within code generation there are multiple layers. At the risk of oversimplifying, let's categorize software development into seven stages of increasing complexity:

- Single-file code generation

  - Writing code from scratch in a language of your choice

  - Code editing (now you are locked into a given language and a corresponding stack)

  - Using libraries in that language (unseen code in a known language)

  - Low-resource programming languages (unseen languages for which training data can be generated)

  - Very low-resource programming languages (unseen languages that are too niche)

- Cross-file code generation

- Code debugging

The first stage is the easiest: the model has to generate single-file code in a language of its choice. Say the task is to create a landing page. The model can use plain HTML/CSS/JS, or it can use a web development framework like Django or Flask in Python.

The second stage is a little more challenging because your stack is locked. Say you created a landing page using the Next.js framework in JavaScript. For any further changes, you are constrained to the same stack, and some things may be more complicated in that particular stack. For example, Django gives you an out-of-the-box admin panel, but building the same functionality in Next.js may require writing a lot of code.

The third stage is where things start getting out of hand. Say you want to build login functionality into your app, and there is a new library that handles almost every login method; you just need to integrate it. If you ask an LLM to use a library that it has not seen in its training data, it will most likely fail.

The fourth stage is a good-to-have capability: it deals with programming languages that are less popular and therefore have less training data. As a consequence, popular vendors don't support these languages. For example, Google's Gemini Code Assist does not support languages like Fortran, COBOL, and Julia. These are termed Low-Resource Programming Languages (LRPLs).

The fifth stage is a corner case: it targets very niche (domain-specific) languages like CUDA and UCLID5. These are called Very Low-Resource Programming Languages (VLRPLs). In the fourth stage, we could translate training data from high-resource languages to low-resource languages and then use this new data to fine-tune models. But this approach makes the overly restrictive assumption that the target low-resource language is general-purpose; for example, we cannot translate arbitrary Java programs to CUDA. Therefore, VLRPLs are the most challenging category for code generation. Mora et al. (2024) proposed a pipeline in which a high-resource programming language serves as an intermediate representation for generating programs in VLRPLs. However, this subject is too niche for the purposes of this article.

The above five stages deal with single-file code generation. The sixth stage unlocks a different dimension: we still need to generate code, but at the repository level. Lately there has been a lot of interest in this area, and rightly so, because human developers mostly work with code repositories rather than standalone files. Most researchers working on this stage focus on repositories written in high-resource programming languages.

The last stage is code debugging. LLMs alone are not sufficient here: you need an agent that can execute code and write test cases to validate it. However, LCMs still have an important role to play if they are trained for code debugging, also known as Automated Program Repair (APR).

In the rest of this blog, we explore how LCMs can do a better job than LLMs in all seven scenarios above. But first, let's examine whether LCMs are feasible for production use cases.

The viable route towards LCMs

In the previous blog, we argued that generic models are improving at a good enough pace and that there is a lot of low-hanging fruit at the agent layer, which can help with cross-file code generation and debugging. But if you want to build a complete replacement for human developers, you must go beyond the low-hanging fruit, and LCMs therefore become inevitable. The question is: is there a capital-efficient way to reach this end state? The answer is yes! It is no secret that an oligopoly is emerging at the model layer, where heavily funded startups have the most capable models and are not sharing those SOTA models or their insights with the open-source community. Startups building at the application layer cannot train their models from scratch because they lack the capital, and therefore the access to GPUs and proprietary data. The silver lining is that the barrier to entry is lower for LCMs than for generic LLMs. When you restrict the domain to code, you need less data and smaller models, which means fewer GPU training hours. Another important factor is the cost of inference: if your model is more accurate, you need less debugging and hence less inference. LCMs therefore make economic sense, because the cost of training is amortized by cheaper inference.
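The amortization argument can be made concrete with a back-of-the-envelope model. This is a minimal sketch under loudly stated assumptions: each generate-and-test round succeeds independently with probability `p` (so the expected number of rounds is `1/p`), and every dollar figure below is a hypothetical placeholder, not a measurement. The class and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class ModelEconomics:
    """Hypothetical per-model numbers; replace them with your own measurements."""
    cost_per_attempt: float     # inference cost (USD) of one generate-and-test round
    success_rate: float         # probability that a single round solves the task
    training_cost: float = 0.0  # one-off training / fine-tuning cost (USD)

    def expected_cost_per_task(self) -> float:
        # Assumption: rounds are independent, so the number of rounds until success
        # is geometrically distributed with expected value 1 / success_rate.
        return self.cost_per_attempt / self.success_rate


def break_even_tasks(generic: ModelEconomics, lcm: ModelEconomics) -> float:
    """Number of tasks after which the LCM's extra training cost is paid back."""
    saving = generic.expected_cost_per_task() - lcm.expected_cost_per_task()
    if saving <= 0:
        return float("inf")  # the LCM never pays for itself
    return (lcm.training_cost - generic.training_cost) / saving


# Illustrative numbers only: a pricier generic LLM vs. a cheaper, more accurate LCM.
generic_llm = ModelEconomics(cost_per_attempt=0.50, success_rate=0.40)
custom_lcm = ModelEconomics(cost_per_attempt=0.10, success_rate=0.50, training_cost=50_000)
print(f"{break_even_tasks(generic_llm, custom_lcm):,.0f} tasks to break even")
```

The specific figure matters less than the structure: accuracy sits in the denominator of the per-task cost, so a gain in success rate reduces inference spend just as a lower per-token price does.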

The real risk of investing in LCMs is the progress of open-source models released by companies like Meta and MistralAI. For example, Llama-3-8B is much better than Llama-2-70B on coding benchmarks. It is therefore better to wait for these regular open-source releases than to create your own model from scratch. You can always fine-tune these open-source models and create multiple versions of them for different domains. Apple is already doing this with generic LLMs: its on-device models are 3B-parameter SLMs (small language models) with adapters trained for each feature.
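The "one base model, many adapters" pattern is commonly implemented with low-rank adapters (LoRA). The sketch below is a toy, pure-Python illustration, not Apple's implementation: it assumes a single linear layer with a frozen weight `W` and, per domain, two small matrices `A` (rank × d_in) and `B` (d_out × rank), giving `y = W x + (alpha / rank) · B (A x)`. The domain names and all numbers are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """A frozen linear layer plus swappable low-rank adapters, one per domain."""

    def __init__(self, weight: Matrix, rank: int, alpha: float):
        self.weight = weight  # frozen base weight, shape (d_out, d_in)
        self.rank = rank
        self.scale = alpha / rank
        self.adapters: Dict[str, Tuple[Matrix, Matrix]] = {}

    def add_adapter(self, name: str, a: Matrix, b: Matrix) -> None:
        # a has shape (rank, d_in); b has shape (d_out, rank).
        assert len(a) == self.rank and all(len(row) == self.rank for row in b)
        self.adapters[name] = (a, b)

    def forward(self, x: Vector, adapter: Optional[str] = None) -> Vector:
        y = matvec(self.weight, x)  # base output, shared by every domain
        if adapter is not None:
            a, b = self.adapters[adapter]
            delta = matvec(b, matvec(a, x))  # low-rank update B(Ax)
            y = [yi + self.scale * di for yi, di in zip(y, delta)]
        return y


# Hypothetical 2x2 base layer with two rank-1 domain adapters.
layer = LoRALinear(weight=[[1.0, 0.0], [0.0, 1.0]], rank=1, alpha=1.0)
layer.add_adapter("python_ml_libs", a=[[1.0, 1.0]], b=[[0.5], [0.0]])
layer.add_adapter("python_stats_libs", a=[[0.0, 1.0]], b=[[0.0], [2.0]])

x = [1.0, 2.0]
print(layer.forward(x))                       # base model only
print(layer.forward(x, "python_ml_libs"))     # base + ML-libraries adapter
print(layer.forward(x, "python_stats_libs"))  # base + statistics-libraries adapter
```

Only `A` and `B` (rank × (d_in + d_out) values) have to be stored and trained per domain, while the large base weight is shared, which is what makes keeping many domain-specific variants cheap.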

If you're targeting low-resource programming languages, training your own LCMs becomes even more critical. This raises new questions, such as which Pre-trained Language Model (PLM) to fine-tune into an LCM, given its performance-to-training-time ratio. Chen et al. (2022) show that although multilingual PLMs perform better, they take much longer to fine-tune. The paper proposes a strategy for selecting the right programming language for the base monolingual PLM. It improves the performance of PLMs fine-tuned on the combined multilingual dataset.

Salient techniques for training the LCMs

In the previous section, we discussed some of the economic trade-offs in training LCMs from PLMs. In this section, let’s delve deeper into the nuances of training LCMs to understand what makes them better than LLMs for code-related tasks.

Pre-training Corpus

The most obvious distinction between LCMs and LLMs lies in the mix of the pre-training corpus. The training dataset of DeepSeek-Coder is composed of 87% source code, 10% English code-related natural language corpus, and 3% code-unrelated Chinese natural language corpus. The English corpus consists of materials from GitHub’s Markdown and StackExchange, which are used to enhance the model’s understanding of code-related concepts and improve its ability to handle tasks like library usage and bug fixing. Meanwhile, the Chinese corpus consists of high-quality articles aimed at improving the model’s proficiency in understanding the Chinese language.
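One simple way to realize such a mixture is to pick the source of each training document with probability equal to its target weight. In this sketch the 87/10/3 weights are the DeepSeek-Coder proportions quoted above, but the sampling code, the source names, and the toy corpora are illustrative assumptions, not DeepSeek's actual data pipeline.

```python
import random
from collections import Counter
from typing import Dict, Iterator, List, Tuple

# Target mixture reported for DeepSeek-Coder's pre-training data.
MIXTURE: Dict[str, float] = {
    "source_code": 0.87,
    "code_related_english": 0.10,
    "chinese_general": 0.03,
}


def sample_documents(corpora: Dict[str, List[str]], weights: Dict[str, float],
                     n: int, seed: int = 0) -> Iterator[Tuple[str, str]]:
    """Yield n (source, document) pairs, choosing each source by its weight."""
    rng = random.Random(seed)
    names = list(weights)
    probs = [weights[name] for name in names]
    for _ in range(n):
        source = rng.choices(names, weights=probs, k=1)[0]
        yield source, rng.choice(corpora[source])  # toy corpora: sample with replacement


# Hypothetical placeholder corpora.
corpora = {
    "source_code": ["def add(a, b):\n    return a + b", "int main() { return 0; }"],
    "code_related_english": ["How do I reverse a list in Python?"],
    "chinese_general": ["<placeholder for a Chinese-language article>"],
}
counts = Counter(source for source, _ in sample_documents(corpora, MIXTURE, n=10_000))
fractions = {name: counts[name] / 10_000 for name in MIXTURE}
print(fractions)  # close to the target 0.87 / 0.10 / 0.03
```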

It is not a surprise that the pre-training corpus is code-heavy for LCMs. But there are further nuances to selecting the right code:

- Deduplication: Lee et al. (2021) have shown that language model training corpora often contain numerous near-duplicates, and the performance of LLMs can be enhanced by removing long repetitive substrings.

- Diversity: As DeepSeek-Coder shows, support for less common programming languages (Bash, PHP, etc.) improves substantially when they are included in pre-training, compared with only fine-tuning on them as done in Code Llama. Incorporating more languages in pre-training is therefore worth the effort.
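Near-duplicate removal is often done with MinHash. The sketch below is one common approach, not necessarily the exact procedure of Lee et al. (2021), who also use exact-substring matching. It estimates the Jaccard similarity of documents' token shingles from per-seed minimum hashes and keeps only the first copy of each near-duplicate group. The shingle size `k=5`, the 128 hash functions, and the 0.8 threshold are assumptions, and the pairwise comparison is O(n²) for clarity; large-scale pipelines add locality-sensitive hashing.

```python
import hashlib
import re
from typing import List, Set


def shingles(text: str, k: int = 5) -> Set[str]:
    """Split text into overlapping k-token windows, ignoring whitespace."""
    tokens = re.findall(r"\w+|[^\w\s]", text)
    if len(tokens) < k:
        return {" ".join(tokens)}
    return {" ".join(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def minhash_signature(text: str, num_hashes: int = 128) -> List[int]:
    """For each seeded hash function, keep the minimum hash over all shingles."""
    grams = shingles(text)
    signature = []
    for seed in range(num_hashes):
        salt = seed.to_bytes(4, "little")  # a different salt acts as a different hash
        signature.append(min(
            int.from_bytes(hashlib.blake2b(g.encode(), digest_size=8, salt=salt).digest(), "little")
            for g in grams
        ))
    return signature


def estimated_jaccard(sig_a: List[int], sig_b: List[int]) -> float:
    """The fraction of matching signature slots estimates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def deduplicate(docs: List[str], threshold: float = 0.8) -> List[str]:
    """Keep the first document of each group of near-duplicates."""
    kept: List[str] = []
    kept_sigs: List[List[int]] = []
    for doc in docs:
        sig = minhash_signature(doc)
        if all(estimated_jaccard(sig, other) < threshold for other in kept_sigs):
            kept.append(doc)
            kept_sigs.append(sig)
    return kept


docs = [
    "def add(a, b):\n    return a + b\n",
    "def add(a,b):\n        return a+b",  # same tokens, different formatting
    "def mul(x, y):\n    return x * y\n",
]
print(len(deduplicate(docs)))
```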

Repository-level pre-training for Cross-file code generation

Most earlier LCMs were pre-trained mainly on file-level source code, which ignores the dependencies between files in a project. In practical applications, such models struggle to scale to project-level code scenarios. The latest LCMs, like DeepSeek-Coder and Codestral, therefore reorganize the pre-training data: they first parse the dependencies between files and then arrange the files so that the context each file relies on is placed before that file in the input sequence. They use a graph-based algorithm for dependency analysis. To incorporate file path information, a comment indicating the file's path is added at the beginning of each file.
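A minimal sketch of this reorganization for a Python-only repository follows. It makes several assumptions: imports are resolved only by matching module names to file names, the ordering is a topological sort that falls back to the file with the fewest unresolved dependencies when a cycle blocks progress (loosely following how DeepSeek-Coder describes handling cycles), and the repository contents are hypothetical. It is not the actual DeepSeek-Coder or Codestral pipeline.

```python
import ast
import os
from typing import Dict, List, Set


def local_imports(source: str, modules: Set[str]) -> Set[str]:
    """Return the repository-local module names imported by a Python file."""
    found: Set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            found.add(node.module.split(".")[0])
    return found & modules


def dependency_order(files: Dict[str, str]) -> List[str]:
    """Order file paths so each file's local dependencies appear before it."""
    module_to_path = {os.path.splitext(os.path.basename(p))[0]: p for p in files}
    modules = set(module_to_path)
    # deps[p] is the set of file paths that p imports.
    deps = {p: {module_to_path[m] for m in local_imports(src, modules)} - {p}
            for p, src in files.items()}
    remaining = set(files)
    order: List[str] = []
    while remaining:
        # Unresolved dependencies play the role of in-degree in the dependency graph.
        in_degree = {p: len(deps[p] & remaining) for p in remaining}
        # Pick a file with no unresolved dependencies; on a cycle, take the file
        # with the fewest. Sorting first breaks ties alphabetically.
        nxt = min(sorted(remaining), key=lambda p: in_degree[p])
        order.append(nxt)
        remaining.remove(nxt)
    return order


def build_repo_sample(files: Dict[str, str]) -> str:
    """Concatenate files in dependency order, each prefixed with a path comment."""
    return "\n".join(f"# {path}\n{files[path]}" for path in dependency_order(files))


# Hypothetical three-file repository: main -> service -> utils.
repo = {
    "app/main.py": "from service import run\nrun()\n",
    "app/service.py": "import utils\n\ndef run():\n    print(utils.greet())\n",
    "app/utils.py": "def greet():\n    return 'hello'\n",
}
print(dependency_order(repo))
print(build_repo_sample(repo))
```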

Even deduplication is done at the repository level rather than the file level, because file-level deduplication may filter out individual files within a repository and disrupt its structure.

Fill-in-the-Middle training: going beyond Next Token Prediction

In code pre-training, it is often necessary to generate inserted content based on both the preceding context and the subsequent text. Because of the dependencies specific to programming languages, next-token prediction alone is insufficient to learn this fill-in-the-middle capability. Therefore, several works (Bavarian et al., 2022; Li et al., 2023) propose Fill-in-the-Middle (FIM) pre-training. This approach randomly divides the text into three parts, then shuffles the order of these parts and joins them with special tokens.
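The transformation itself is simple. This sketch uses character-level cut points and the prefix-suffix-middle (PSM) ordering. The sentinel strings are placeholders modeled on StarCoder-style names, since each model defines its own special tokens, and the `fim_rate` default of 0.5 is an assumption.

```python
import random

# Placeholder sentinel strings; the exact special tokens differ between models.
PREFIX, SUFFIX, MIDDLE = "<fim_prefix>", "<fim_suffix>", "<fim_middle>"


def fim_transform(doc: str, rng: random.Random, fim_rate: float = 0.5) -> str:
    """With probability fim_rate, rewrite doc in prefix-suffix-middle (PSM) order."""
    if len(doc) < 2 or rng.random() >= fim_rate:
        return doc  # keep as an ordinary left-to-right training example
    # Two distinct cut points split the document into prefix / middle / suffix.
    i, j = sorted(rng.sample(range(len(doc) + 1), 2))
    prefix, middle, suffix = doc[:i], doc[i:j], doc[j:]
    # The model reads the prefix and suffix first, then learns to generate the
    # middle with ordinary next-token prediction.
    return f"{PREFIX}{prefix}{SUFFIX}{suffix}{MIDDLE}{middle}"


rng = random.Random(0)
code = "def add(a, b):\n    return a + b\n"
print(fim_transform(code, rng, fim_rate=1.0))
```

Because the middle is moved to the end, a standard left-to-right decoder can learn infilling without any architectural change; at inference time, the prompt ends with the middle sentinel and the model generates the missing span.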

To assess the efficacy of FIM, researchers rely on the HumanEval-FIM benchmark (Fried et al., 2022). This benchmark is a single-line FIM task for Python: one line of code from a HumanEval solution is randomly masked, testing the model's ability to predict the missing line. StarCoder (Li et al., 2023) was one of the first LCMs trained for infilling. Later, DeepSeek-Coder and Codestral also demonstrated infilling capability. Generic LLMs (Llama-3 70B) and even some older LCMs (CodeLlama 70B) do not have infilling capability, as the following benchmarks from the Codestral announcement show:

Task-specific code-generation

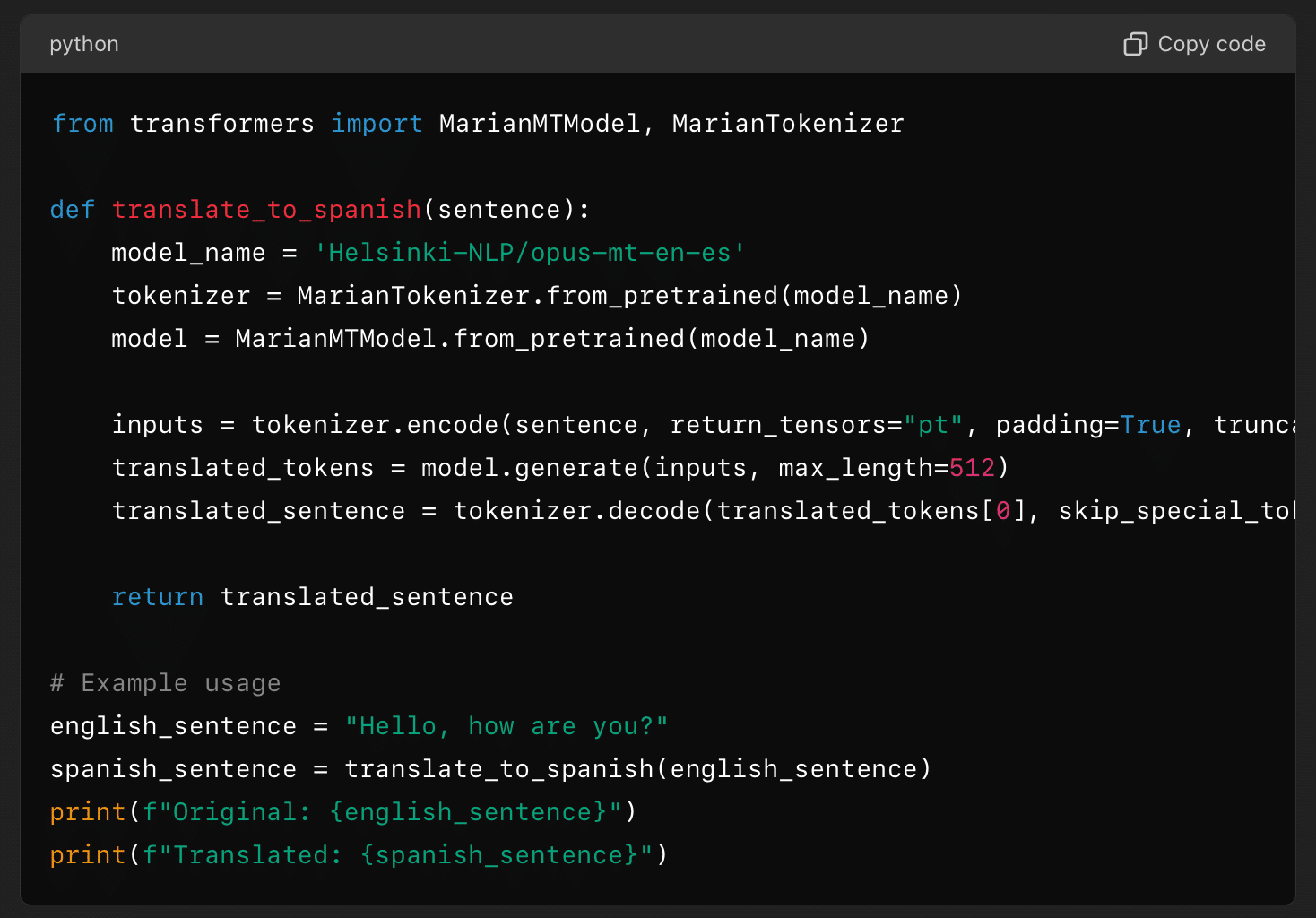

While many developers applaud the progress that tools like GitHub Copilot are making, they share one common complaint: code assistants are not good at using the right libraries. Instead of using libraries, they tend to write code from scratch. The reason is fairly obvious: these LCMs were not trained to work with domain-specific libraries. Say we have to write Python code to translate a sentence from English to Spanish. When we gave this prompt to GPT-4o, it produced a solution using the googletrans library. But suppose we are already using the transformers library in our codebase; then we have to explicitly ask GPT-4o to use transformers. The output is shown below:

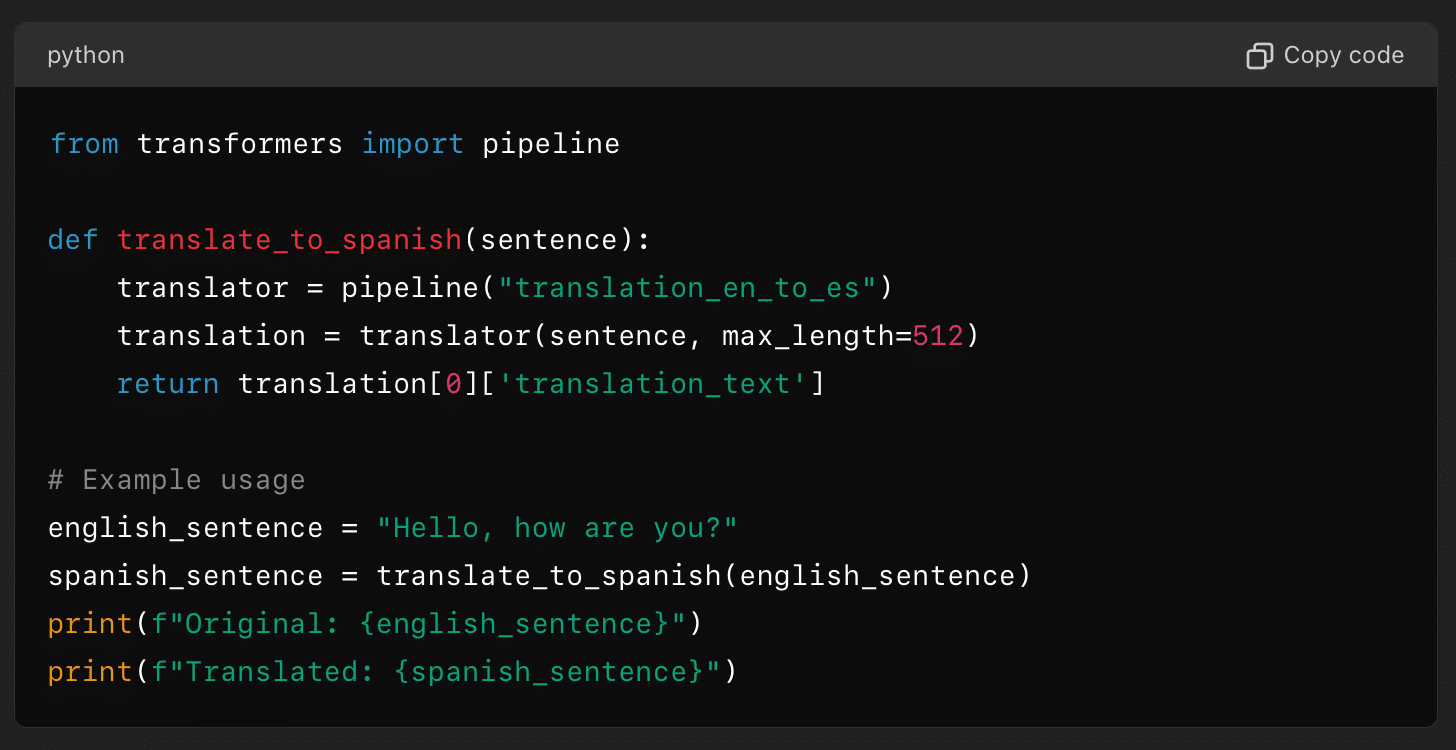

While the above output is perfectly fine, there is a cleaner way to do translation: the pipeline function in the transformers library. We asked GPT-4o to use the pipeline function, and this time it produced code that looks valid and clean.

But if you try to execute the code, you’ll get the following error message:

```
ValueError: The task does not provide any default models for options ('en', 'es')
```

The bug is a very minor one: `pipeline("translation_en_to_es")` should be changed to `pipeline("translation", model="Helsinki-NLP/opus-mt-en-es")`.

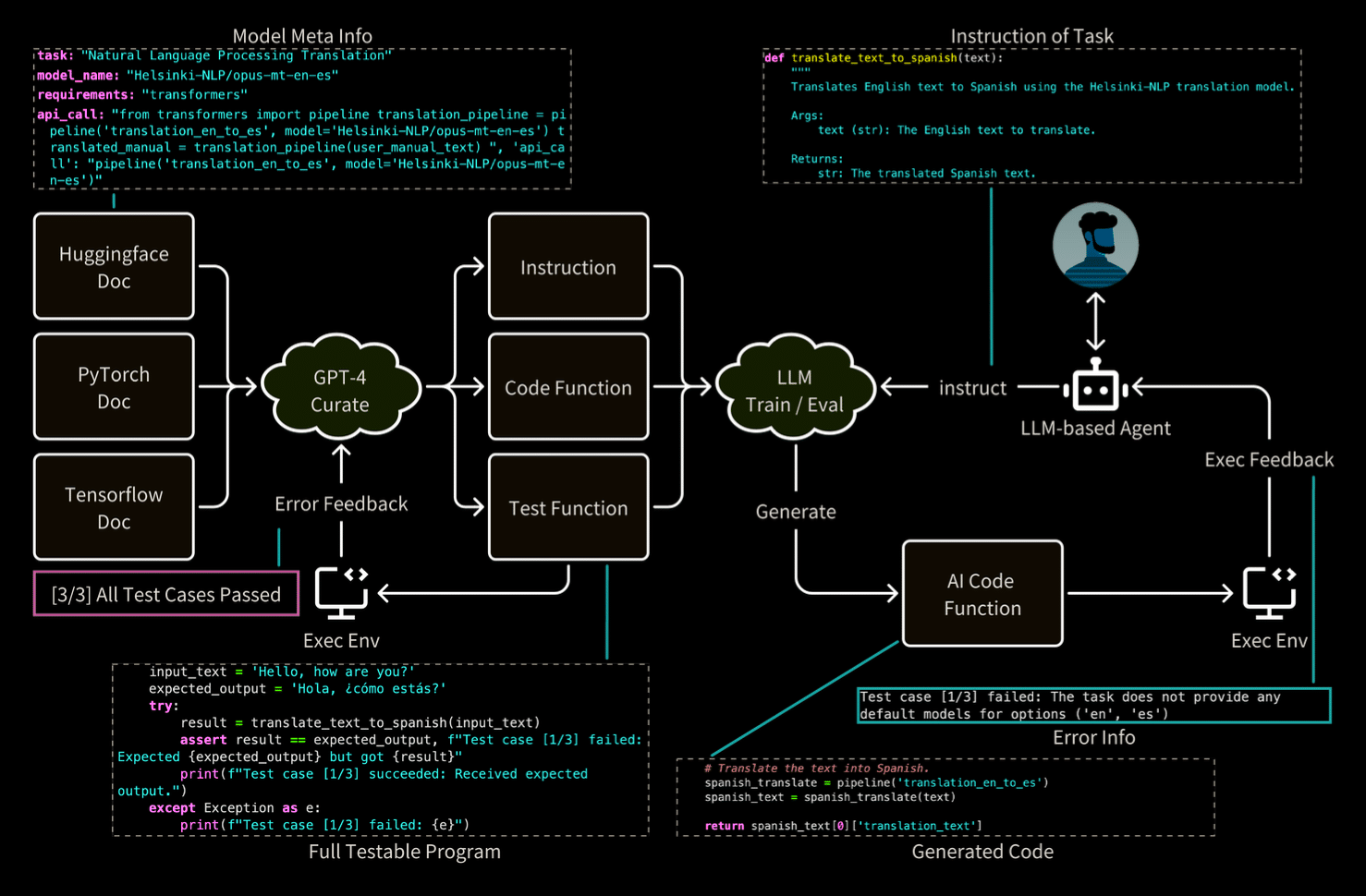

However, if you go through the documentation of the pipeline function, you'll realize that the above code would have worked if we were translating to French instead of Spanish, because the library assigns a default model to the English-to-French pair. Edge cases like these can never be solved by changing the pre-training workflow; you need fine-tuned models for domain-specific tasks. Fine-tuning itself is not the challenge. The real challenge is continuous, automated fine-tuning, because libraries keep evolving and there are too many domains to keep track of. For example, you could group all of Python's artificial intelligence libraries into one domain, and all of its statistics-related libraries into another. We could have 10+ domain-specific fine-tuned models for Python alone. The good news is that we don't need full fine-tuning; parameter-efficient fine-tuning would suffice. But how do we automate the fine-tuning workflow? Xia et al. (2024) propose an agentic framework (CodeGen) to address this exact requirement:

This framework comprises two integral components. On the left side, training data is produced by analyzing library documentation together with the provided document data (model meta-information). This data, which includes testable programs, is then validated in an execution environment and used to train (fine-tune) an LLM. On the right side, an LLM-based agent directs the code generation process. Actual executable environments push feedback to both the agent and the LLM, helping refine the generated code. The fine-tuned LLM becomes the right LCM for that domain.
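The "validate in an execution environment" step can be sketched as execution-based filtering: run each candidate program together with its test in a fresh interpreter and keep only the examples that pass. Everything here is a hypothetical stand-in, not code from Xia et al. (2024): the `Candidate` fields and function names are illustrative, the candidates are hand-written where the framework would generate them from documentation, and a plain subprocess is not a real sandbox (a production setup would add containers and resource limits).

```python
import subprocess
import sys
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    instruction: str  # natural-language task, e.g. derived from library docs
    program: str      # candidate solution produced by an LLM
    test: str         # assertions that must hold after the program runs


def passes_in_sandbox(candidate: Candidate, timeout: float = 10.0) -> bool:
    """Run program + test in a fresh interpreter; succeed only on exit code 0."""
    script = candidate.program + "\n\n" + candidate.test + "\n"
    try:
        result = subprocess.run([sys.executable, "-c", script],
                                capture_output=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        return False
    return result.returncode == 0


def build_finetuning_set(candidates: List[Candidate]) -> List[dict]:
    """Keep only executable, test-passing examples as prompt/completion pairs."""
    return [{"prompt": c.instruction, "completion": c.program}
            for c in candidates if passes_in_sandbox(c)]


candidates = [
    Candidate("Compute the SHA-256 hex digest of b'abc' using hashlib.",
              "import hashlib\ndigest = hashlib.sha256(b'abc').hexdigest()",
              "assert len(digest) == 64"),
    # hashlib has no sha256_hex: this mimics a hallucinated library API.
    Candidate("Compute the SHA-256 hex digest of b'abc' using hashlib.",
              "import hashlib\ndigest = hashlib.sha256_hex(b'abc')",
              "assert len(digest) == 64"),
]
print(build_finetuning_set(candidates))  # only the first candidate survives
```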

Cross-file code debugging, aka Automated Program Repair (APR)

Like code generation, debugging is a crucial part of programming, consuming 35-50% of development time and 50-75% of the total budget (McConnell, 2004). This explains the recent surge of interest in the field, especially after the funding of Cognition Labs (the startup behind Devin). Although Devin is closed source, there is a lot of activity in the open-source space as well:

- SWE-agent (11.7K stars): SWE-bench was one of the first code-debugging benchmarks, built from real GitHub issues and the pull requests that resolved them. SWE-agent was built by the makers of SWE-bench.

- AutoCodeRover (2.3K stars): This paper, released in mid-April 2024, had dual objectives, based on the observation that bug fixing and feature addition are the two key categories of tasks a development team focuses on when maintaining an existing software project. Its main contribution was advanced tools for retrieving the right code snippets to use in LLM calls.

- Aider (11.7K stars): Another similar open-source project that has been trending on GitHub recently. It also uses efficient repository maps, which help with context retrieval.
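To illustrate what a repository map is, here is a simplified stand-in built with Python's standard `ast` module: a compact, signature-only outline of each file that can be placed in an LLM prompt instead of full file contents. This is an assumption-laden sketch, not Aider's implementation (Aider builds its map with tree-sitter and ranks what to include). The function names and the example repository are hypothetical.

```python
import ast
from typing import Dict, List


def outline(source: str) -> List[str]:
    """List top-level functions and classes (with their methods) in a Python file."""
    lines: List[str] = []
    for node in ast.parse(source).body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            args = ", ".join(a.arg for a in node.args.args)
            lines.append(f"def {node.name}({args})")
        elif isinstance(node, ast.ClassDef):
            lines.append(f"class {node.name}")
            for item in node.body:
                if isinstance(item, (ast.FunctionDef, ast.AsyncFunctionDef)):
                    args = ", ".join(a.arg for a in item.args.args)
                    lines.append(f"    def {item.name}({args})")
    return lines


def repo_map(files: Dict[str, str]) -> str:
    """Render a signature-only map of the repository for use in a prompt."""
    parts: List[str] = []
    for path in sorted(files):
        parts.append(f"{path}:")
        parts.extend(f"  {line}" for line in outline(files[path]))
    return "\n".join(parts)


# Hypothetical two-file repository.
files = {
    "shop/cart.py": (
        "class Cart:\n"
        "    def __init__(self):\n"
        "        self.items = []\n"
        "    def add(self, item, qty=1):\n"
        "        self.items.append((item, qty))\n"
    ),
    "shop/pricing.py": (
        "def total(cart, tax_rate):\n"
        "    return sum(q for _, q in cart.items) * (1 + tax_rate)\n"
    ),
}
print(repo_map(files))
```

The map costs a few tokens per definition, so an agent can show the model the shape of the whole repository and then retrieve full bodies only for the files it decides are relevant.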

However, all the players mentioned above share one common pattern: none of them use proprietary LCMs. They all rely on popular LLMs like OpenAI's GPT-4o and Anthropic's Claude 3 Opus. Having witnessed how LCMs can impact code generation, we at SuperAGI strongly believe that LCMs will have a big role to play in autonomous debugging as well. The main challenge in creating an LCM for debugging is the lack of training data. In other domains, researchers have overcome this bottleneck by using SOTA LLMs to create synthetic data, and a recent paper named DebugBench demonstrated the feasibility of this approach.
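DebugBench used a SOTA LLM (GPT-4) to implant bugs into correct code. As a cheap, deterministic stand-in for that LLM step (an assumption, not DebugBench's method), the sketch below mutates one comparison operator with Python's `ast` module to produce a (buggy, fixed) training pair. The operator table and function names are hypothetical, and `ast.unparse` requires Python 3.9+.

```python
import ast
import random
from typing import Optional, Tuple

# Each comparison operator is swapped for a plausible but wrong counterpart.
SWAPS = {ast.Lt: ast.LtE, ast.LtE: ast.Lt, ast.Gt: ast.GtE, ast.GtE: ast.Gt,
         ast.Eq: ast.NotEq, ast.NotEq: ast.Eq}


def inject_bug(source: str, seed: int = 0) -> Optional[Tuple[str, str]]:
    """Return a (buggy, fixed) pair by mutating one comparison, or None if none exist."""
    tree = ast.parse(source)
    compares = [n for n in ast.walk(tree)
                if isinstance(n, ast.Compare) and type(n.ops[0]) in SWAPS]
    if not compares:
        return None
    target = random.Random(seed).choice(compares)
    target.ops[0] = SWAPS[type(target.ops[0])]()  # e.g. >= becomes >
    # Unparse the original too, so the pair differs only in the injected bug.
    return ast.unparse(tree), ast.unparse(ast.parse(source))


correct = "def is_adult(age):\n    return age >= 18\n"
buggy, fixed = inject_bug(correct)
print(buggy)  # an off-by-one boundary bug
```

Each pair can become a training example in which the model sees the buggy code (optionally with a failing test) and must produce the fixed version.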

The next question is how training LCMs for code debugging will differ from training them for code generation. We can borrow some older but innovative ideas from landmark papers like ELECTRA. We quote the main insight of this paper below:

“Masked language modeling (MLM) pre-training methods such as BERT corrupt the input by replacing some tokens with [MASK] and then train a model to reconstruct the original tokens. While they produce good results when transferred to downstream NLP tasks, they generally require large amounts of compute to be effective. As an alternative, we propose a more sample-efficient pre-training task called replaced token detection. Instead of masking the input, our approach corrupts it by replacing some tokens with plausible alternatives sampled from a small generator network. Then, instead of training a model that predicts the original identities of the corrupted tokens, we train a discriminative model that predicts whether each token in the corrupted input was replaced by a generator sample or not. Thorough experiments demonstrate this new pre-training task is more efficient than MLM because the task is defined over all input tokens rather than just the small subset that was masked out. As a result, the contextual representations learned by our approach substantially outperform the ones learned by BERT given the same model size, data, and compute.”

Finding buggy pieces of code is very similar to detecting replaced tokens in natural language. This is just one of the ideas suggesting that it makes sense to train LCMs for code debugging.
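To make the analogy concrete, here is a sketch of how ELECTRA-style replaced-token-detection labels could be built for code. Assumptions: a simple regex tokenizer, and a hypothetical lookup table of plausible substitutes standing in for ELECTRA's small generator network (a real generator would be a small masked language model). As in ELECTRA, a token counts as "replaced" only if the sampled token differs from the original.

```python
import random
import re
from typing import Dict, List, Tuple

TOKEN_RE = re.compile(r"\w+|==|!=|<=|>=|[^\w\s]")

# Hypothetical stand-in for ELECTRA's small generator: plausible substitutes
# for a few token types, chosen to resemble typical off-by-one and operator bugs.
ALTERNATIVES: Dict[str, List[str]] = {
    "<": ["<=", ">"], "<=": ["<", ">="], ">": [">=", "<"], ">=": [">", "<="],
    "+": ["-", "*"], "-": ["+"], "0": ["1"], "1": ["0", "2"],
}


def corrupt(code: str, replace_prob: float = 0.3,
            seed: int = 0) -> Tuple[List[str], List[int]]:
    """Return corrupted tokens and per-token labels (1 = replaced, 0 = original)."""
    rng = random.Random(seed)
    corrupted: List[str] = []
    labels: List[int] = []
    for tok in TOKEN_RE.findall(code):
        new = tok
        if tok in ALTERNATIVES and rng.random() < replace_prob:
            # The original stays among the candidates: if the "generator" reproduces
            # it, the token is labeled original, as in ELECTRA.
            new = rng.choice(ALTERNATIVES[tok] + [tok])
        corrupted.append(new)
        labels.append(int(new != tok))
    return corrupted, labels


tokens, labels = corrupt("if a[i] > a[i + 1]:\n    swap(a, i, i + 1)", replace_prob=0.5, seed=3)
print([t for t, flag in zip(tokens, labels) if flag])  # tokens a discriminator should flag
```

A discriminator trained on these labels gets a learning signal from every token, which is the sample-efficiency argument in the ELECTRA quote. For code, the same per-token prediction doubles as "which tokens look buggy".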

Conclusion

Automating software engineering tasks has long been a vision of software engineering researchers and practitioners. We admit that there is still plenty of progress to be made using vanilla LLMs alone, but it is also very clear that the last mile of this problem will require deep expertise in training (or fine-tuning) proprietary LCMs. The open question is when the right time to invest in LCMs is, and what portion of your capital and time should go there.