Introduction

The year is 2030. The latest company to get listed on NASDAQ has just 2 employees: a CEO and a CTO, supported by a team of over a thousand agents. That seems quite dystopian, right? Agreed. Whether AI Agents will augment human productivity (and hence the ROI on hiring human employees) or replace the human workforce, we don’t know yet! However, one thing is quite clear: the ratio of AI agents to human employees in any organization is going to increase by at least 100x. With that said, let’s talk about which functions will become the beachheads for these AI Agents (or, if you prefer, AI Colleagues).

In 2022, we saw companies like Jasper emerge in the Content Writing space. Then, in 2023, some companies broke out in the Enterprise Search space. In 2024, as LLMs have become more powerful, we are seeing a number of companies scale in the AI Sales Agent space. But what is the common thread between these three spaces? All of these tasks are “self-contained”. For example, a search query is a one-off task whose output is generally independent of the broader context in which the user makes it. A good test for whether a task is “self-contained” is to ask yourself: “Can I outsource this to an intern?” This question also implies that the AI Agents deployed today are not yet good enough to replace full-time human employees. But what is the difference between a full-time employee and an intern? It’s mostly organizational context. Interns don’t have context.

2025 will be the year when AI Agents expand beyond “self-contained” tasks and start driving large projects end-to-end. To keep the discussion focused, let’s talk about just one function: software development. It is a huge market, much larger than what we’ve historically seen in developer tooling. Tools like GitHub Copilot significantly enhance developer productivity. But why haven’t we seen a tremendous increase in the throughput of software consultancy firms? Because developers are still present in the value chain of building software. To draw a crude parallel with Ford’s assembly line: adding GitHub Copilot does not reduce the number of stations on the line, it merely makes every station more efficient. The wave of end-to-end development automation that will hit us in the near future will be more like a huge 3D printer, capable of producing cars much faster than a traditional assembly line.

Now that we have some imagery to describe the scale of impact that GenAI will bring through end-to-end autonomous development, it’s time to dive deeper. In this blog post, we will try to answer three questions:

- Why is autonomous development still unsolved? What are the major challenges?

- What are the different approaches for autonomous development?

- What are their pros and cons, and which approach seems most promising?

Challenges of end-to-end autonomous development

We started this blog post with the concept of “self-contained” tasks. Today, state-of-the-art (SOTA) models like GPT-4 are quite adept at solving “self-contained” coding tasks. Let’s look at some examples:

- GitHub Copilot launched in 2021, and its initial offering was mostly about generating pieces of code. Partial code generation requires relatively little context, because you do not have to think about how the entire project is organized or how its different pieces interact. You just have to solve the small, isolated problem the user asked about.

- Then came many point solutions for writing test cases, generating documentation, and so on. If snippet-level code generation sits upstream, testing and documentation sit downstream. Did you notice the pattern? GenAI-based solutions made an early impact in the peripheral areas of software development, and these peripheral tasks are “self-contained”!

Challenge 1: Having the right context

That brings us to the first challenge of end-to-end (E2E) autonomous development: understanding the whole project, in other words, having the complete context. The word “context” may suggest a quick-and-dirty solution: an LLM with a context window large enough to ingest the entire codebase in a single call. This solution has several loopholes:

- Larger context windows lead to a drop in performance: the Stanford paper “Lost in the Middle” shows that state-of-the-art LLMs often struggle to use relevant information in their context windows, especially when that information is buried in the middle of the context.

- The problem of unwanted imitation: when generating code, we typically want the most correct code the LLM can produce, not the most likely continuation of the existing code, which may include bugs.

- A big part of the context lives outside the codebase: in Jira issues, PRDs, etc.

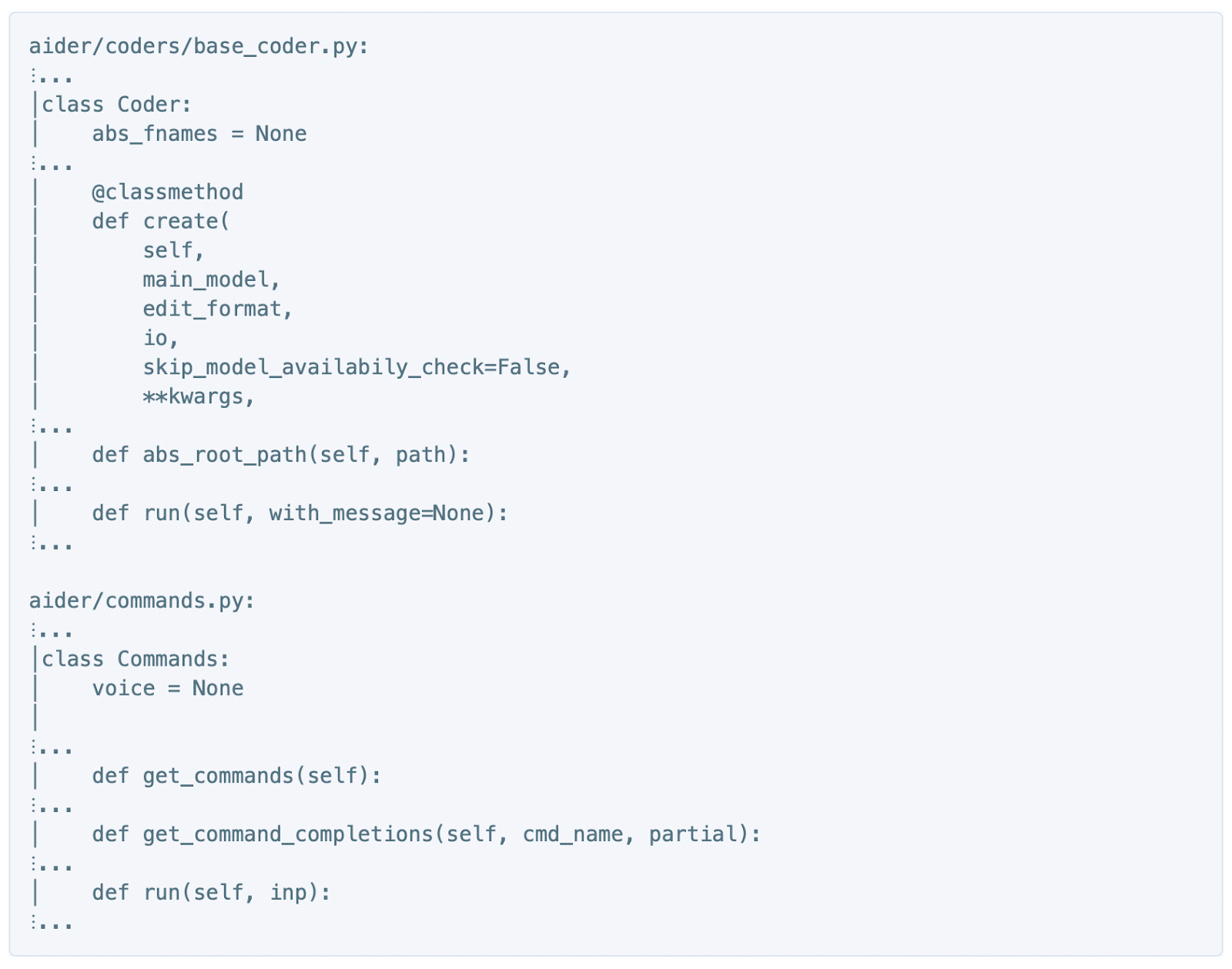

Therefore, this problem needs a smarter solution than feeding in the raw codebase in its entirety. One increasingly accepted solution is to feed in a repository map, a distilled representation of the entire repository. A sample repository map looks like this:

[Figure: Having the right context, a sample repository map]
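To make the idea concrete, here is a minimal sketch of one way to build a repository map for a Python codebase. It is an illustration under assumptions, not how any particular tool does it: it uses only the standard-library `ast` module, keeps top-level functions, classes, and method signatures, and drops every function body. The names `repo_map`, `_signature`, and `FUNCS` are hypothetical.

```python
import ast
from pathlib import Path

# Node types we treat as "functions" when building the map.
FUNCS = (ast.FunctionDef, ast.AsyncFunctionDef)


def _signature(fn) -> str:
    """Render a function header without its body, e.g. 'def get(self, key: str) -> bytes'."""
    prefix = "async def" if isinstance(fn, ast.AsyncFunctionDef) else "def"
    header = f"{prefix} {fn.name}({ast.unparse(fn.args)})"  # ast.unparse needs Python 3.9+
    if fn.returns is not None:
        header += f" -> {ast.unparse(fn.returns)}"
    return header


def repo_map(root: str) -> str:
    """Return a compact outline (files, classes, signatures) of every .py file under root."""
    root_path = Path(root)
    lines = []
    for path in sorted(root_path.rglob("*.py")):
        tree = ast.parse(path.read_text(encoding="utf-8"), filename=str(path))
        lines.append(f"{path.relative_to(root_path).as_posix()}:")
        for node in tree.body:  # top-level definitions only; bodies are never emitted
            if isinstance(node, FUNCS):
                lines.append(f"  {_signature(node)}")
            elif isinstance(node, ast.ClassDef):
                lines.append(f"  class {node.name}:")
                for item in node.body:
                    if isinstance(item, FUNCS):
                        lines.append(f"    {_signature(item)}")
    return "\n".join(lines)
```

Calling `repo_map("path/to/project")` returns a short outline string that can go into the prompt in place of the raw source. Real-world repository maps often go further, for example by ranking symbols by how often they are referenced so that the map fits a fixed token budget.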

As we can see, the map captures only the essential details rather than copying the entire codebase. This addresses the first two loopholes mentioned above:

- A smaller context window is sufficient, as we are not sharing the entire code.

- Since we are not sharing the entire code, existing bugs inside function bodies are not fed into the LLM either.

Better documentation can address the third loophole. If we write more descriptive documentation within the code itself, we can feed that documentation to the LLM along with the repository map, helping it understand the product from the user’s perspective.

Challenge 2: Personalized code generation and Inverse Scaling

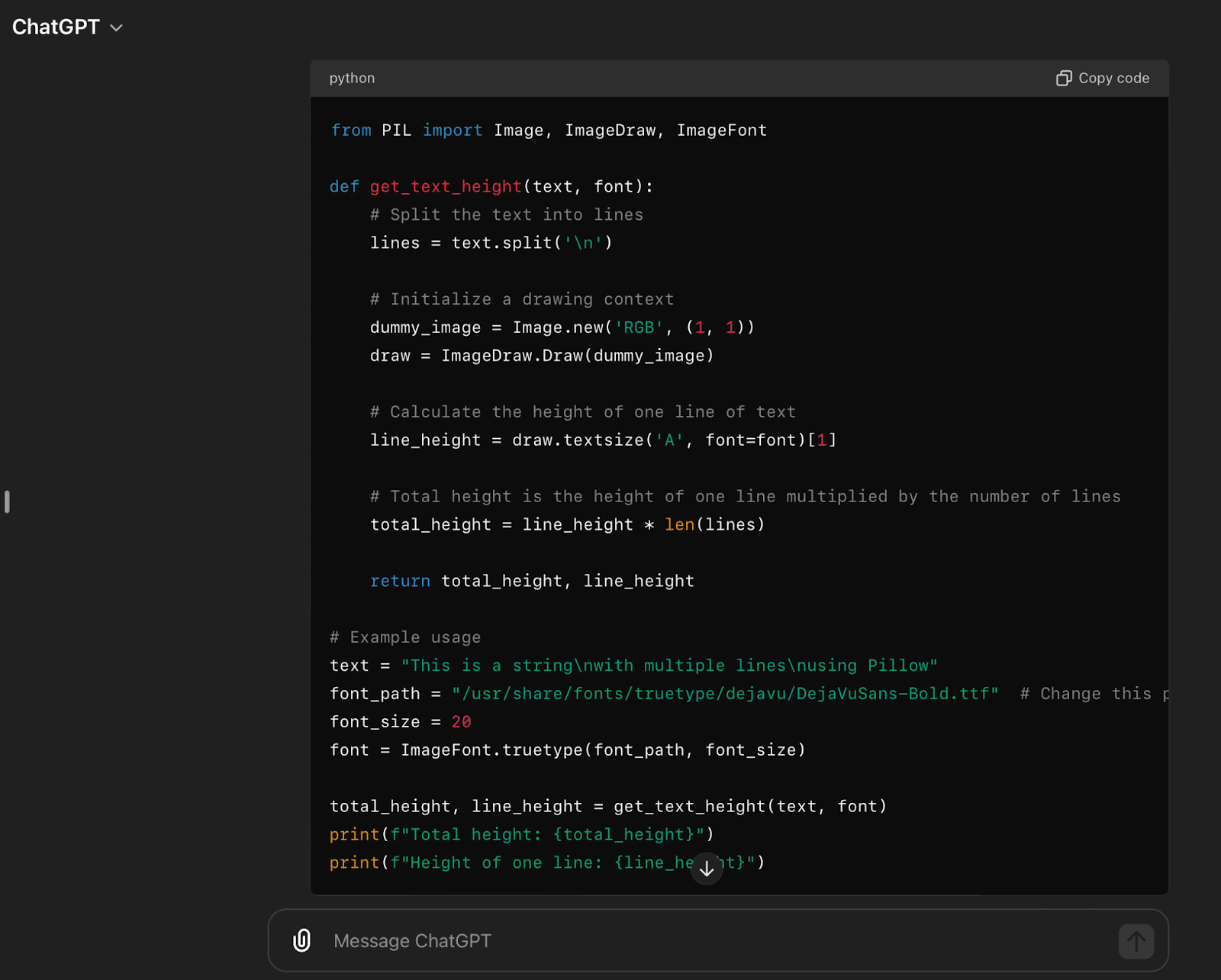

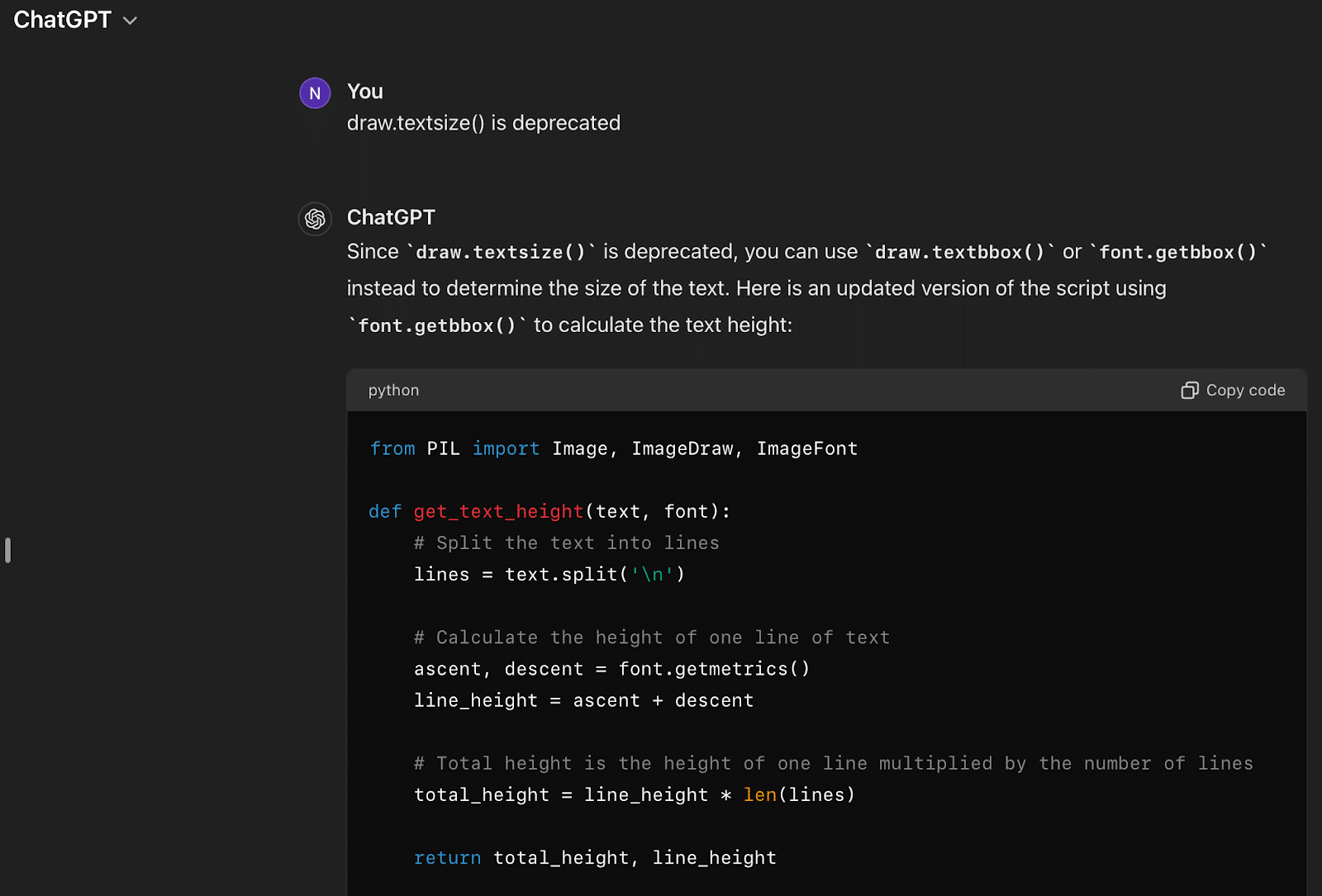

LLMs are great at suggesting the most acceptable solution, because that solution appeared most frequently in the training dataset. But sometimes, the most acceptable solution is not the correct one. For example, in a world where APIs are regularly deprecated, LLMs are at a natural disadvantage because they have been trained on far more code that uses the deprecated APIs than code that uses the latest ones. See the following screenshot, where GPT-4o was asked for a simple script to determine the row height of a string with newlines in PIL (an image library for Python):

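The problem with this output is that draw.textsize() was deprecated in Pillow 9.2 (Pillow is the maintained fork of PIL) and removed in Pillow 10; the documented replacements are draw.textbbox() and draw.textlength(). But one can understand why most LLMs still reach for the deprecated function: it dominates the code they were trained on.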
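For reference, here is a sketch of what a version-aware answer to that prompt could look like. It is a hedged example under assumptions: `row_heights` is a hypothetical helper, `draw` is expected to be a Pillow `ImageDraw.ImageDraw` object, and “row height” is interpreted as the bounding-box height of each line. It prefers `textbbox()` and only falls back to `textsize()` on old installations that lack the newer method.

```python
def row_heights(draw, text, font=None):
    """Return the pixel height of each line in a multi-line string.

    Assumes `draw` is a Pillow ImageDraw object. textsize() was deprecated in
    Pillow 9.2 and removed in 10.0, so it is used only when textbbox() is missing.
    """
    heights = []
    for line in text.split("\n"):
        if hasattr(draw, "textbbox"):
            # textbbox returns (left, top, right, bottom) for text drawn at xy.
            _, top, _, bottom = draw.textbbox((0, 0), line, font=font)
            heights.append(bottom - top)
        else:
            # Legacy path for very old Pillow versions without textbbox().
            _, height = draw.textsize(line, font=font)
            heights.append(height)
    return heights
```

With Pillow installed, you would call it as `row_heights(ImageDraw.Draw(img), "Hello\nWorld", font)`. Because bounding boxes depend on the glyphs in each line, a uniform line pitch is better taken from font metrics; for TrueType fonts, `FreeTypeFont.getmetrics()` returns the ascent and descent.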

Another reason the most acceptable solution may not be the correct one is coding style. Maybe your organization follows a less popular design paradigm. How can you ensure that the LLM follows it? I’ve heard customers tell me, ‘I wish a company could fine-tune their model securely on my codebase’. While tuning a model on your codebase might make sense in theory, in practice there is a catch: once you tune the model, it becomes static unless you do continuous pre-training (which is costly).

One feasible solution to this problem is RAG (Retrieval-Augmented Generation), where we retrieve relevant code snippets from our codebase to influence the generated output. Of course, RAG brings the typical challenges associated with retrieval. One must rank the code snippets along three dimensions: Relevance, Recency, and Importance. ‘Recency’ can address the API deprecation issue, and ‘Importance’ can give higher weight to design patterns that appear frequently in your codebase. But getting the right balance between these three dimensions is going to be tricky. Even if we master retrieval, there is no guarantee that the LLM will use the retrieved code snippets while drafting its response. One might expect this to be less of an issue with larger models. In reality, it can be quite the opposite, a phenomenon called Inverse Scaling.

LLMs are trained on huge datasets, so they are less likely to adapt to newer environments that differ from the usual setup. Inverse scaling refers to tasks on which larger models perform worse than smaller ones; here, larger models are more likely to ignore new information in the context and rely on the patterns they saw during training.
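One way to combine the three ranking dimensions (Relevance, Recency, and Importance) is a weighted sum of normalized scores. The sketch below is illustrative only: the `Snippet` fields, the 90-day recency half-life, the log-scaled usage count standing in for ‘Importance’, and the default weights are all assumptions to be tuned, and relevance is assumed to come from an embedding similarity computed elsewhere.

```python
import math
from dataclasses import dataclass


@dataclass
class Snippet:
    path: str
    relevance: float   # assumed: similarity to the query, already in [0, 1]
    age_days: float    # days since the snippet was last modified
    usage_count: int   # assumed proxy for importance: how often the pattern recurs


def score(s: Snippet, max_usage: int, half_life_days: float = 90.0,
          weights=(0.6, 0.25, 0.15)) -> float:
    """Weighted sum of relevance, exponential-decay recency, and log-scaled importance."""
    w_rel, w_rec, w_imp = weights
    recency = 0.5 ** (s.age_days / half_life_days)  # 1.0 today, 0.5 after one half-life
    importance = math.log1p(s.usage_count) / math.log1p(max_usage) if max_usage > 0 else 0.0
    return w_rel * s.relevance + w_rec * recency + w_imp * importance


def rank(snippets, k=3, **kwargs):
    """Return the top-k snippets by combined score; kwargs are forwarded to score()."""
    max_usage = max((s.usage_count for s in snippets), default=0)
    return sorted(snippets, key=lambda s: score(s, max_usage, **kwargs), reverse=True)[:k]
```

In this setup, a slightly less similar but recently edited snippet (say, one that already uses a newer API) can outrank an older, more common one, while setting the weights to `(1.0, 0.0, 0.0)` reduces it to pure similarity search. Choosing the weights is exactly the balancing problem described above.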

Challenge 3: The Last Mile Problem

Around mid-2023, we started seeing impressive demos of agents that can do end-to-end development. SuperCoder from SuperAGI and GPT-Engineer were some of the early players in this area. However, most of these projects were trying to create a new application from scratch. In other words, they were solving for the first mile. Creating a new application looks cooler than making incremental changes, but the latter is the more frequent use case and hence has a larger market. These projects focused on building from scratch because it is the easier use case: the LLM is free to choose its own stack and design paradigms. In 2024, however, we are seeing startups that focus on making changes and additions to existing applications. This is happening only now because, until recently, LLMs simply could not understand a huge codebase and build on top of it. Today, we have capable models with large context windows, along with techniques like RAG and repository maps to aid code generation in existing projects. But none of this guarantees that incremental changes to the codebase will work on the first try.

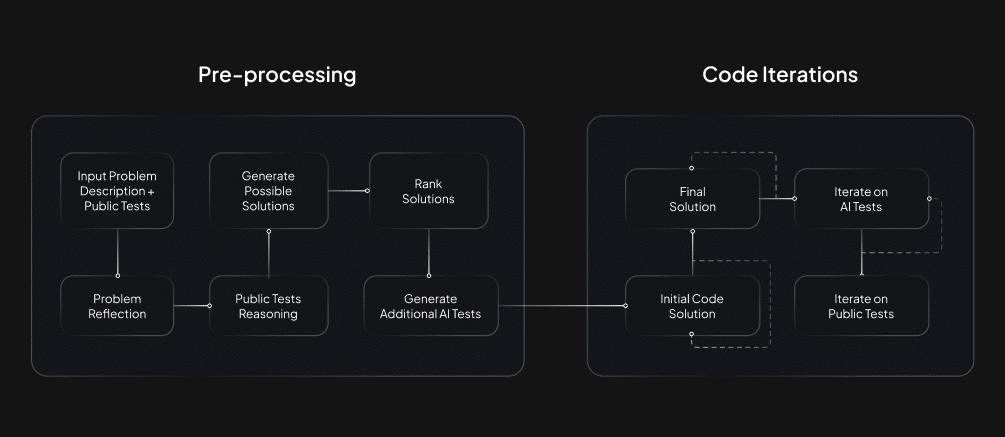

An interesting idea (reiterated by Andrej Karpathy) is flow engineering, which goes beyond single-prompt or chain-of-thought prompting and focuses on iteratively generating and testing code.

Flow engineering needs a feedback loop from code execution. To illustrate this, let’s revisit the deprecation example from the previous section. When the AI agent executes the code generated by GPT-4o, it may work if the environment has an older version of PIL. Otherwise, the terminal will show either a deprecation warning for draw.textsize() (Pillow 9.2 to 9.5) or an AttributeError (Pillow 10 and later, where the method was removed). The LLM will then come up with a workaround, as shown in the image below.

However, the LLM still does not suggest the ideal solution, which uses the replacement API (draw.textbbox(), or draw.textlength() when only the width is needed). This is the inverse scaling problem we covered in the previous section. But if the agent had web access, it could run a Google search that leads it to a Stack Overflow page with the right alternative for the deprecated function. This shows how a closed ecosystem can create a feedback loop with the help of tools like a terminal, a browser, and an IDE. We call this reinforcement learning from agentic feedback. An agent can leverage this feedback loop both to create projects from scratch and to make incremental changes in existing projects.
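Here is a minimal sketch of the terminal half of that generate, execute, and retry loop, under stated assumptions: `generate` is a hypothetical callable standing in for an LLM call (it receives the task and the previous error output), the generated code is Python, and it runs in a fresh interpreter with `-W error::DeprecationWarning` so that deprecation warnings fail the run instead of scrolling past. The names `run_snippet` and `generate_until_it_runs` are illustrative, not part of any product.

```python
import subprocess
import sys
from typing import Callable, Optional


def run_snippet(code: str, timeout: float = 30.0) -> subprocess.CompletedProcess:
    """Execute code in a fresh interpreter, turning DeprecationWarnings into errors."""
    return subprocess.run(
        [sys.executable, "-W", "error::DeprecationWarning", "-c", code],
        capture_output=True, text=True, timeout=timeout,
    )


def generate_until_it_runs(
    generate: Callable[[str, Optional[str]], str],
    task: str,
    max_attempts: int = 3,
):
    """Generate code, execute it, and feed the terminal output back until it runs cleanly."""
    feedback = None
    for attempt in range(1, max_attempts + 1):
        code = generate(task, feedback)
        result = run_snippet(code)
        if result.returncode == 0:
            return code, attempt
        # The traceback (e.g. a DeprecationWarning or AttributeError) becomes the next prompt's feedback.
        feedback = result.stderr[-2000:]
    raise RuntimeError(f"no working code after {max_attempts} attempts:\n{feedback}")
```

A production agent would sandbox the execution, run the project’s test suite rather than a bare script, and add other feedback sources such as a browser or IDE diagnostics; the sketch only shows the shape of the loop.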

So far, we’ve highlighted three major challenges and their possible mitigations. Notice that our ideal solution is taking the shape of an agentic system that uses tools like a terminal, an IDE, and a browser, along with core components like RAG and repository maps. Are agents the enduring answer to end-to-end autonomous development, or do we need something more, maybe a code-specific LLM? Let’s explore some alternative approaches in the next section.

Are agents the enduring solution to Autonomous Development? Or do we need code-specific models?

Theoretically, using a code-specific model makes sense, especially when optimizing for production-ready solutions that must have low latency. When the domain is narrow, smaller models have often been able to match the performance of general models. Very few players are taking this approach, for two reasons:

- Empirically, we have seen successful apps like Cursor and Devin which are built on top of generic GPT models, not code-specific models.

- Training a new model is capital-intensive. The core question is whether a new team can outpace frontier model improvements. The base model space is moving so fast that if you go deep on a code-specific model, you risk a better base model coming into existence and leapfrogging you before your new model has finished training.

Apart from efficiency and latency, what can code-specific models bring to the table? It has to be quality; otherwise, there is no case for code-specific models, since generic models will keep getting more efficient with time. But what if we can ensure quality with better techniques than training a code-specific model? That is exactly what we at SuperAGI are doing with SuperCoder 2.0. We are taking a very opinionated approach to software development: we are building coding agents optimized for opinionated development stacks. For example, most frontend engineering today happens in JavaScript (another popular choice for frontend is Flutter). But within JavaScript, multiple libraries that were once popular are no longer relevant; one such example is AngularJS from Google. Currently, only three stacks (Vue, React, and Next.js) are popular. To match human-level output, we are building deeper integrations with popular stacks. Likewise, for backend projects we do not support every stack: if you want your backend written in Python, we will build it using FastAPI, one of the most popular Python frameworks today.

Conclusion

A lot of founders believe that to build a long-term moat in code generation, your agentic framework must be powered by your own code-specific models. We agree with this thought process. The only caveat is strategic: is it the right time to invest in building your own model? Probably not, because generic models are improving at a good enough pace. However, this pace is slowing down. GPT-4 Turbo, which was released this April, is not significantly better than the GPT-4 preview that came out almost a year earlier. So maybe the time to train your own model is coming. Still, the bottom line is that startups must first capture low-hanging fruit like flow engineering, because it is a more capital-efficient way to deliver value to the end user. Once all the low-hanging fruit has been picked, it makes sense to train Large Coding Models (LCMs). We will soon publish a blog post on LCMs, so stay tuned!