Introduction

SuperAGI is focused on developing Large Agentic Models (LAMs) that will power autonomous AI agents. As part of this effort, we have been working on enhancing multi-hop sequential reasoning capabilities. We are excited to share our early breakthroughs in the quest towards AGI.

Introducing SAM (Small Agentic Model), a 7B model that demonstrates impressive reasoning abilities despite its smaller size. SAM-7B has outperformed existing SoTA models on various reasoning benchmarks, including GSM8k and ARC-C.

Our key contributions are as follows:

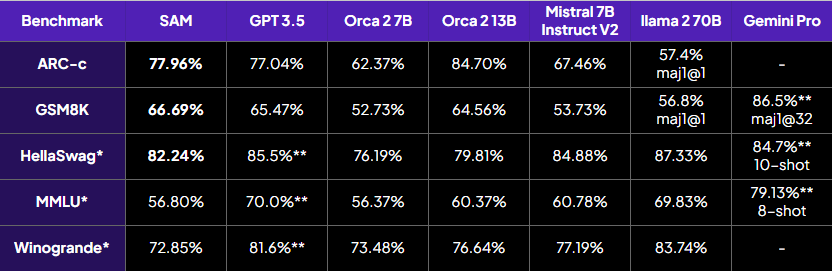

- SAM-7B outperforms GPT-3.5, Orca, and several other 70B models on multiple reasoning benchmarks, including ARC-C and GSM8k.

- Interestingly, despite being trained on a 97% smaller dataset, SAM-7B surpasses Orca-13B on GSM8k.

- All responses in our fine-tuning dataset are generated by open-source models without any assistance from state-of-the-art models like GPT-3.5 or GPT-4.

Although the objective of this exercise was to develop reasoning capabilities, the same process can be used to impart any desired skill set to base LLMs.

The models have been evaluated with SuperAGI’s custom evaluation pipeline, which we developed for the ARC-C and GSM8k benchmarks to ensure a fair comparison across all models. For other benchmarks, we used the LM Evaluation Harness, the same library used by Hugging Face’s Open LLM Leaderboard.

Mistral 7B’s strong results on reasoning and CoT (Chain-of-Thought) benchmarks made it the perfect base model for our experiments on further improving reasoning capabilities. However, it is the curated dataset that plays the pivotal role in improving the reasoning capability of any LLM.

We are making our model and dataset publicly available for research. You can test and use it here.

Key insights

Recent research suggests that strategically curating data, rather than solely increasing model size, leads to improved reasoning. The fact that SAM-7B can outperform GPT-3.5 supports this claim.

Building Large Agentic Models (LAMs) requires exceptional reasoning and planning capabilities. One of our key findings is that including explanation traces in the fine-tuning dataset improves reasoning capabilities. Here are the key highlights of our findings:

Challenge 1: Lack of large high-quality datasets for reasoning

- Data quality is of utmost importance: the more refined the data, the less of it we need.

- However, quality is not everything. The balance between quality, diversity, and quantity of data is essential. Our objective was to enhance reasoning capacity through fine-tuning on explanation traces. To accomplish this, we used open-source LLMs as teacher models to fine-tune a smaller LLM (Mistral-7B).

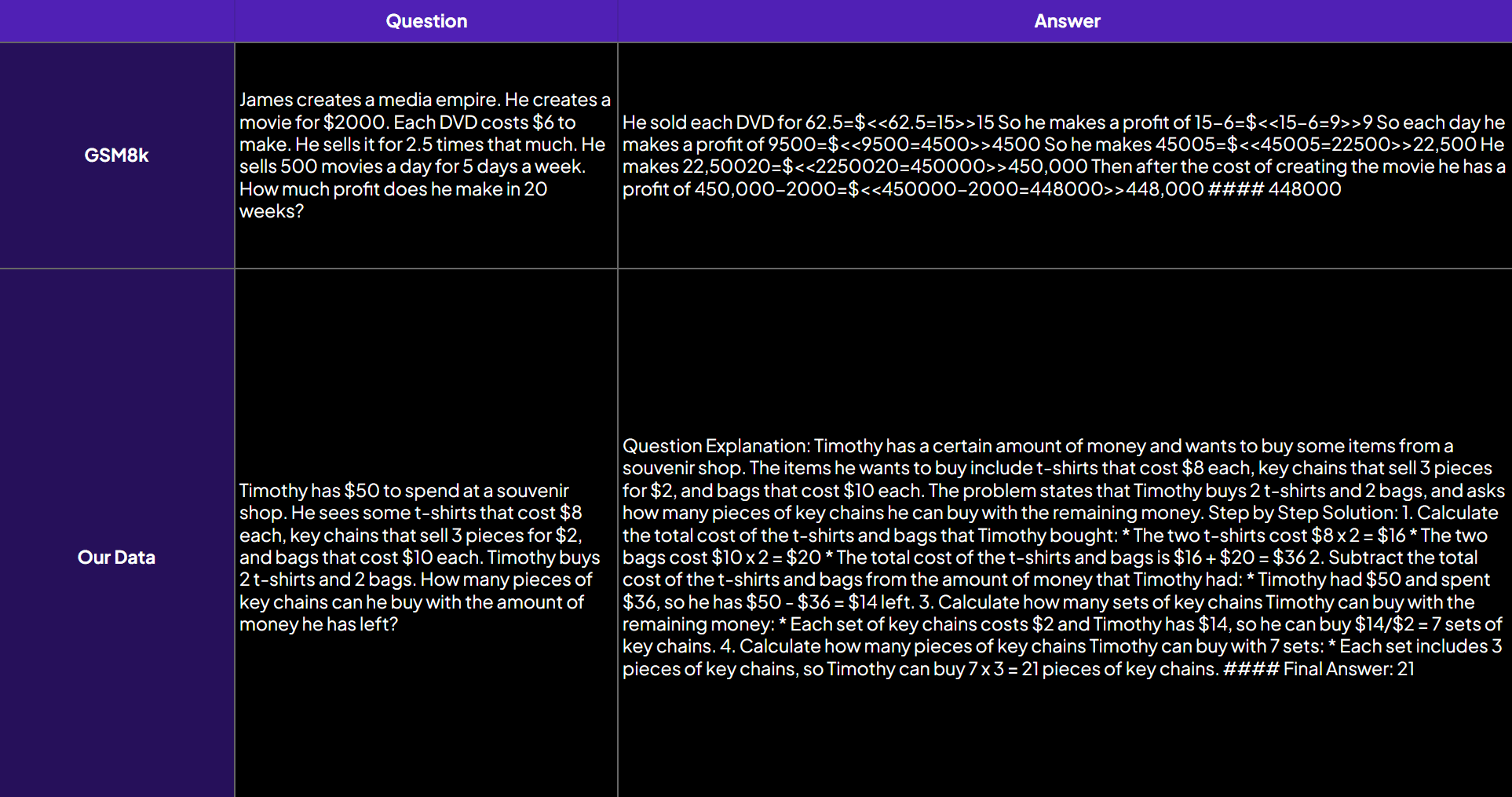

- When creating the reasoning dataset, it is important to identify the correct traces and ensure that the data meets our standards. This means avoiding overly short traces, inaccurate reasoning, and incorrect breakdowns. The Chain-of-Thought reasoning in the dataset should adhere to the BSS principle, which stands for Breakdown – Steps – Solution.

- To introduce diversity during generation, we conducted a “temperature sweep” of explanations: for each row in our dataset, we generated an explanation trace using a random temperature value between 0.1 and 0.9.
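A minimal sketch of the per-row temperature sweep, assuming a uniform draw from [0.1, 0.9]. The function name `assign_temperatures` and the fixed seed are illustrative choices, not part of SuperAGI’s pipeline; each sampled temperature would then be passed to whichever open-source teacher model generates the trace for that row.

```python
import random

# Range of the temperature sweep described above.
TEMP_MIN, TEMP_MAX = 0.1, 0.9


def assign_temperatures(rows, seed=0):
    """Pair every dataset row with its own random sampling temperature.

    Hypothetical helper: `rows` is any iterable of dataset rows (for
    example, question strings). A fixed seed makes the sweep reproducible.
    """
    rng = random.Random(seed)
    # uniform() draws from [TEMP_MIN, TEMP_MAX]; rounding keeps values readable
    return [(row, round(rng.uniform(TEMP_MIN, TEMP_MAX), 2)) for row in rows]


if __name__ == "__main__":
    for question, temperature in assign_temperatures(["Q1", "Q2", "Q3"]):
        print(question, temperature)
```

Drawing a fresh temperature per row, rather than using one fixed value, is what spreads the traces between conservative (near 0.1) and more varied (near 0.9) phrasings.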

Here’s a table showing sample rows from the GSM8k dataset and from our dataset.

- We achieve benchmark-level performance with explanation traces generated by open-source LLMs using our Reasoning Skeleton Prompt. This prompt helps us generate explanations in a breakdown-solution-answer format and also promotes diversity in the explanation traces, balancing creativity and control. This approach shows promise as we explore the use of open-source LLMs as reasonable teachers. The key learnings are as follows:

- Knowledge distillation from open-source LLMs is hard to control. Our prompt gives us more control over the generated traces, which helps ensure data quality.

- Our prompt ensures consistency while generating CoT (Chain-of-Thought) reasoning, ToT (Tree-of-Thought) reasoning, and detailed breakdown.

- We also found that ToT requires longer explanation traces, which we need to explore further.

- Sequential and Multi-Hop reasoning

- Reasoning must be performed in multiple steps as well as in the correct sequence.

- Smaller models can imitate larger LLMs, but they find it hard to reason like them.

- However, smaller LLMs benefit from a detailed sequential breakdown when generating an answer. We have found that providing a detailed breakdown of reasoning traces helps smaller LLMs become more stable reasoners.
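The structural requirements described above (a breakdown first, then multiple ordered steps, then a solution, with no overly short traces) can be approximated by a simple filter. This is a hypothetical heuristic, not the authors’ filter: the step markers (`Step N`, `N.` or `N)` at the start of a line), the `#### Final Answer :` marker taken from the prompt shown in Challenge 2, and the thresholds `min_steps` and `min_breakdown_chars` are all assumptions.

```python
import re

# Hypothetical markers: a step starts a line with "Step N", "N." or "N)".
STEP_PATTERN = re.compile(r"^\s*(?:step\s*\d+|\d+[.)])", re.IGNORECASE | re.MULTILINE)
# Solution marker borrowed from the generation prompt in this post.
ANSWER_PATTERN = re.compile(r"####\s*final answer\s*:", re.IGNORECASE)


def follows_bss(trace, min_steps=2, min_breakdown_chars=20):
    """Heuristic Breakdown -> Steps -> Solution check for one explanation trace."""
    answer = ANSWER_PATTERN.search(trace)
    if answer is None:
        return False  # no parsable solution line
    # Only steps that appear before the final answer count as reasoning steps.
    steps = [m for m in STEP_PATTERN.finditer(trace) if m.start() < answer.start()]
    if len(steps) < min_steps:
        return False  # too few steps: likely a short or collapsed trace
    breakdown = trace[: steps[0].start()].strip()
    return len(breakdown) >= min_breakdown_chars  # some explanation precedes the steps
```

Requiring the steps to come before the answer line is a cheap proxy for “correct sequence”; it cannot tell whether the steps themselves are logically valid.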

Challenge 2: Generating Synthetic Data without using SOTA models like GPT-3.5/4

- We explored in more detail how to generate explanation traces from open-source LLMs: Falcon 180B, Yi-34B-Chat, and Mixtral.

- We found that while it is possible to generate high-quality explanation traces from these open-source LLMs, it requires a rigorous data-cleaning process that is not necessary when generating data from GPT-X.

- For each question, we generated explanation traces using the following prompt:

"""You are an expert reasoner and teacher. You will follow these instructions:\\n

1)Given question and options. \\n

2)Explain the question in detail.\\n

3)Come up with detailed step by step solution for your answer.\\n

4)Finally Always give answer label like this #### Final Answer : <write the answer label here>\\n

"""

- We used variations of this prompt to generate rationales for the questions. For example, when generating traces for GSM8k, we used “You are an expert mathematician and teacher”.

- To diversify the generated traces, we sampled a random temperature in the range [0.1, 0.9] for each generation. For each subset of the training questions, we generated responses using all three models.

- We had to discard many explanations that did not meet our standards because of non-ASCII characters, incorrect responses, irrelevant strings, and hallucinated text, among other issues. We matched the responses against the correct answers so that we did not feed incorrect reasoning traces to the model.
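The prompt variation and cleaning rules in this section can be sketched as follows. The template text is quoted from the prompt above, with the persona made a parameter; the newline joins, the question/options layout, the helper names (`build_prompt`, `normalize`, `keep_trace`), and the normalization rules are hypothetical. Only non-ASCII characters, missing final answers, and wrong answers are checked here; detecting hallucinated or irrelevant text would need further rules.

```python
import re

# Prompt text quoted from the post; only the persona varies per dataset.
PROMPT_TEMPLATE = (
    "You are an {persona}. You will follow these instructions:\n"
    "1)Given question and options. \n"
    "2)Explain the question in detail.\n"
    "3)Come up with detailed step by step solution for your answer.\n"
    "4)Finally Always give answer label like this "
    "#### Final Answer : <write the answer label here>\n"
)
# Hypothetical regex for the final-answer line the prompt asks for.
FINAL_ANSWER_RE = re.compile(r"####\s*final answer\s*:\s*(.+)", re.IGNORECASE)


def build_prompt(question, options=None, persona="expert reasoner and teacher"):
    """Fill the template, then append the question and any MCQ options (assumed layout)."""
    prompt = PROMPT_TEMPLATE.format(persona=persona)
    prompt += f"\nQuestion: {question}\n"
    if options:
        lines = [f"{label}) {text}" for label, text in options.items()]
        prompt += "Options:\n" + "\n".join(lines) + "\n"
    return prompt


def normalize(answer):
    """Light normalization so that '1,000.' and '1000' compare equal."""
    return answer.strip().rstrip(".").replace(",", "").lower()


def keep_trace(trace, gold):
    """Keep a trace only if it is ASCII, has a final answer, and that answer is correct."""
    if not trace.isascii():
        return False  # discard traces containing non-ASCII characters
    answers = FINAL_ANSWER_RE.findall(trace)
    if not answers:
        return False  # no parsable final answer
    return normalize(answers[-1]) == normalize(gold)  # drop incorrect reasoning traces
```

For GSM8k, `build_prompt(question, persona="expert mathematician and teacher")` reproduces the variant described above; ARC-C questions would pass their answer choices through `options`.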

Challenge 3: Creating a custom pipeline for evaluating models

- One of the main challenges in evaluating LLMs is the lack of a standardized, objective pipeline. The prompts used in the Orca paper differ from those used by the LM Evaluation Harness. Another major issue is output parsing: even a correct answer may not be marked as correct because of its output format.

- Each model is optimized for a specific set of prompts and hyperparameter settings. For example, Orca 2 significantly underperforms on GSM8k on the Hugging Face Open LLM Leaderboard, which uses the LM Evaluation Harness. This motivated us to create a relatively more objective pipeline for evaluating models on GSM8k and ARC-C.

- We prompted the model to output its answer after a parsable marker such as “####”, “Final Answer : ”, “Final answer is : ”, or “#### Final answer : ”.

- Using these markers, we wrote multiple regular expressions to extract the answer and compare it with the gold label. With this approach, we saw a dramatic improvement in the measured performance of both Orca 2 and SAM compared with LM Harness.

- This approach is worth exploring further, since most benchmarks, such as HellaSwag, WinoGrande, and TruthfulQA, are in multiple-choice format.
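The marker-based extraction described above can be sketched as follows. This is a minimal illustration, not SuperAGI’s actual pipeline: the regular expressions, the helper names (`extract_answer`, `gsm8k_correct`, `arc_correct`), and the comparison rules (first number after the marker for GSM8k, first standalone option letter A to E for ARC-C) are assumptions.

```python
import re

# One pattern covering the markers listed above: "####", "Final Answer :",
# "Final answer is :" and "#### Final answer :" (case-insensitive).
MARKER_RE = re.compile(
    r"(?:####\s*(?:final answer(?:\s+is)?\s*:)?|final answer(?:\s+is)?\s*:)\s*(.+)",
    re.IGNORECASE,
)
NUMBER_RE = re.compile(r"-?\d[\d,]*(?:\.\d+)?")  # e.g. 42, -3, 1,000, 2.5
LABEL_RE = re.compile(r"\b([A-E])\b")  # standalone uppercase option letter


def extract_answer(output):
    """Return the text after the last answer marker, or None if there is none."""
    matches = MARKER_RE.findall(output)
    return matches[-1].strip() if matches else None


def gsm8k_correct(output, gold):
    """Compare the first number after the marker with the gold number."""
    answer = extract_answer(output)
    number = NUMBER_RE.search(answer) if answer else None
    if number is None:
        return False
    return float(number.group().replace(",", "")) == float(gold.replace(",", ""))


def arc_correct(output, gold_label):
    """Compare the first option letter after the marker with the gold label."""
    answer = extract_answer(output)
    label = LABEL_RE.search(answer) if answer else None
    return label is not None and label.group(1) == gold_label.upper()
```

Taking the last marker rather than the first means that a model which restates the format instruction before answering is still scored on its final answer.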

Training and Evaluation

SAM-7B was created by fine-tuning the Mistral-7B base model on 52M tokens of reasoning data consisting of explanation traces. The model was LoRA fine-tuned in bf16 on six NVIDIA H100 SXM (80GB) GPUs for 4 hours, with the following hyperparameters:

- Number of epochs: 1

- Batch size: 16

- Learning rate: 2e-5

- Warmup ratio: 0.1

- Optimizer: AdamW

- Scheduler: Cosine
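The hyperparameter list does not say how warmup and the cosine schedule interact. A common convention (used, for example, by `get_cosine_schedule_with_warmup` in Hugging Face Transformers) is linear warmup over the first `warmup_ratio` of training steps followed by cosine decay to zero. The sketch below assumes that convention; the helper name `lr_at` and rounding the warmup length up are our assumptions, and LoRA settings are omitted because the post does not state them.

```python
import math

# Values from the hyperparameter list above.
HYPERPARAMS = {
    "num_epochs": 1,
    "batch_size": 16,
    "learning_rate": 2e-5,
    "warmup_ratio": 0.1,
    "optimizer": "AdamW",
    "scheduler": "cosine",
    "precision": "bf16",
}


def lr_at(step, total_steps,
          peak_lr=HYPERPARAMS["learning_rate"],
          warmup_ratio=HYPERPARAMS["warmup_ratio"]):
    """Learning rate at `step`: linear warmup, then cosine decay to zero (assumed)."""
    warmup_steps = math.ceil(warmup_ratio * total_steps)
    if step < warmup_steps:
        return peak_lr * step / warmup_steps  # ramp from 0 up to peak_lr
    # Fraction of the post-warmup phase completed, clamped to [0, 1].
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * progress))
```

With one epoch, `total_steps` would be roughly the number of training examples divided by the batch size of 16; that count is not stated in the post.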

The performance of SAM-7B has been measured on multiple benchmarks that evaluate the model’s reasoning capabilities: ARC-Challenge, GSM8k, and HellaSwag.

- ARC-Challenge: Evaluates commonsense reasoning, an essential component of agentic reasoning.

- GSM8k: Evaluates sequential multi-hop reasoning, which is also essential for agentic task breakdown.

- HellaSwag: Gauges the model’s ability to reason and complete sentences, which requires an understanding of humans’ notions of the physical world.

These benchmarks show that our model reasons better than Orca 2 7B, Orca 2 13B, and GPT-3.5. Despite its smaller size, SAM-7B shows better multi-hop reasoning, as shown below:

Note:

*⇒ Evaluated using LM Evaluation Harness

** ⇒ Benchmark values derived from published papers

-⇒ Benchmark not run and results not publicly available

- Since we extract answers with regular expressions, we use maj(1)@k with k = 8 for all models: we ask each question 8 times and take the most frequent answer as the majority vote.

- This model is not suitable for conversation and Q&A. It performs best at task breakdown and reasoning.

- The model is not suitable for production use as it lacks guardrails for safety, bias and toxicity.
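The maj(1)@k scoring described in the notes above can be sketched as follows, with k = 8. This is an illustrative sketch, not the evaluation code itself: the helper names are hypothetical, and ignoring samples where the regex found no answer (represented as `None`) is our assumption about how unparsable outputs are handled.

```python
from collections import Counter

K = 8  # samples per question, kept the same for all models


def majority_vote(answers):
    """Most frequent parsed answer; None entries (regex found nothing) are ignored.

    Counter.most_common orders equal counts by first occurrence, so ties go
    to the answer that appeared first.
    """
    parsed = [a for a in answers if a is not None]
    if not parsed:
        return None
    return Counter(parsed).most_common(1)[0][0]


def maj_at_k_accuracy(samples_per_question, golds, k=K):
    """Fraction of questions whose majority vote over the first k samples is correct."""
    if len(samples_per_question) != len(golds):
        raise ValueError("need exactly one list of samples per gold label")
    if not golds:
        return 0.0
    correct = sum(
        majority_vote(samples[:k]) == gold
        for samples, gold in zip(samples_per_question, golds)
    )
    return correct / len(golds)
```

Because each of the k answers is extracted independently with the same regexes, a single badly formatted sample does not sink a question as long as most samples parse to the correct answer.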