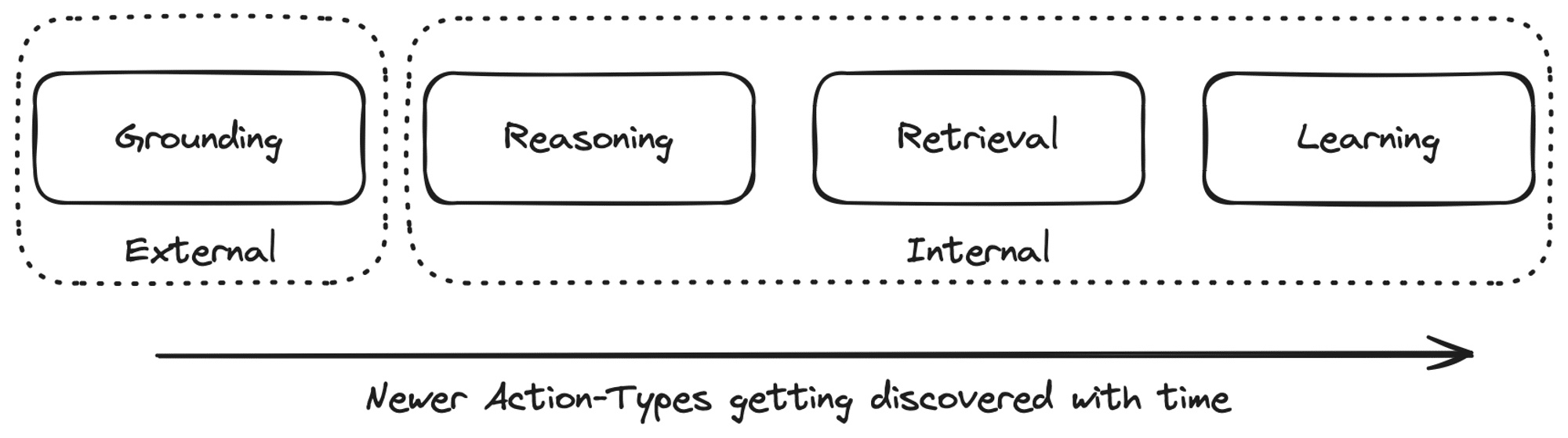

In part 1 of the Towards AGI series, we discussed a core component of agents: Memory. However, early agent architectures didn’t have Memory as a first-class primitive. As we add new primitives to the ideal agent architecture, we must also adapt the architecture to interact with these components. Here is a take on how the Agent Action Space evolves with each incremental addition to the agent architecture.

The evolution of Agentic Actions

Phase 1: External Actions

The SayCan paper (Ahn et al., 2022) introduced the fundamental capability of actions. The authors called it Grounding: the process of connecting natural language and abstract knowledge to an internal representation of the real world. In simpler terms, Grounding is about interacting with the external world.

It’s easy to assume that all actions involve interacting with the external world. This assumption was the major limitation of SayCan agents. They could perform various actions (551, to be exact, such as “find the apple” or “go to the table”), but all these actions were of the same type: Grounding. However, if we examine human behavior, we’ll notice that many of our actions are internal, including thinking, memorization, recall, and reflection. This insight inspired the next generation of agents, which are equipped with the reasoning capabilities of LLMs.

Phase 2: Internal Actions

The next significant advancement was the ReAct paper (Yao et al., 2022b), which introduced a new type of action: Reasoning. The action space of ReAct included two kinds of actions:

- External actions, such as Grounding

- Internal actions, like Reasoning

The name ReAct, short for Reasoning + Acting, seems to imply that “acting” refers solely to Grounding and that Reasoning is not an action. However, the next generation of agents introduced more internal actions, acknowledging Reasoning as a type of action in its own right.

Phase 3: The Four Fundamental Agentic Actions

The latest generation of agent architectures has Long-Term Memory (LTM) as a first-class module. To take full advantage of LTM, these architectures introduced two new actions:

- Retrieval (Reading from Memory)

- Learning (Writing into Memory)

Both of these actions are internal because they do not interact with the external world.
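To make the taxonomy concrete, here is a minimal Python sketch of the action space at each phase. The names `ActionType`, `EXTERNAL_ACTIONS`, `is_internal`, and `PHASES` are illustrative assumptions, not identifiers from SayCan, ReAct, or any agent framework.

```python
from enum import Enum


class ActionType(Enum):
    """The four fundamental action types discussed above."""
    GROUNDING = "grounding"   # external: acts on the outside world
    REASONING = "reasoning"   # internal: thinks with the LLM
    RETRIEVAL = "retrieval"   # internal: reads from long-term memory
    LEARNING = "learning"     # internal: writes to long-term memory


# Only Grounding touches the external world; everything else is internal.
EXTERNAL_ACTIONS = frozenset({ActionType.GROUNDING})


def is_internal(action: ActionType) -> bool:
    """Return True for actions that never leave the agent."""
    return action not in EXTERNAL_ACTIONS


# Action space of each phase, as described in the text.
PHASES = {
    "Phase 1 (SayCan)": {ActionType.GROUNDING},
    "Phase 2 (ReAct)": {ActionType.GROUNDING, ActionType.REASONING},
    "Phase 3 (LTM agents)": set(ActionType),
}

for phase, actions in PHASES.items():
    print(phase, sorted(a.value for a in actions))
```

Each phase is a superset of the previous one, which is the sense in which the action space "evolves" rather than being replaced.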

Here is a simple diagram which captures the evolution of Agentic Action-Space.

Phase 4: Composite Agentic Actions

Now, one might wonder whether there are more action types that will be added to the Action Space.

The answer is both “Yes” and “No”. “No”, because most systems, whether human or computer, only have these four fundamental action types. However, “Yes”, because these fundamental action types can be combined to create multiple Composite Action Types. For instance, planning is a composite action type that can be implemented by combining two fundamental actions – reasoning and retrieval.

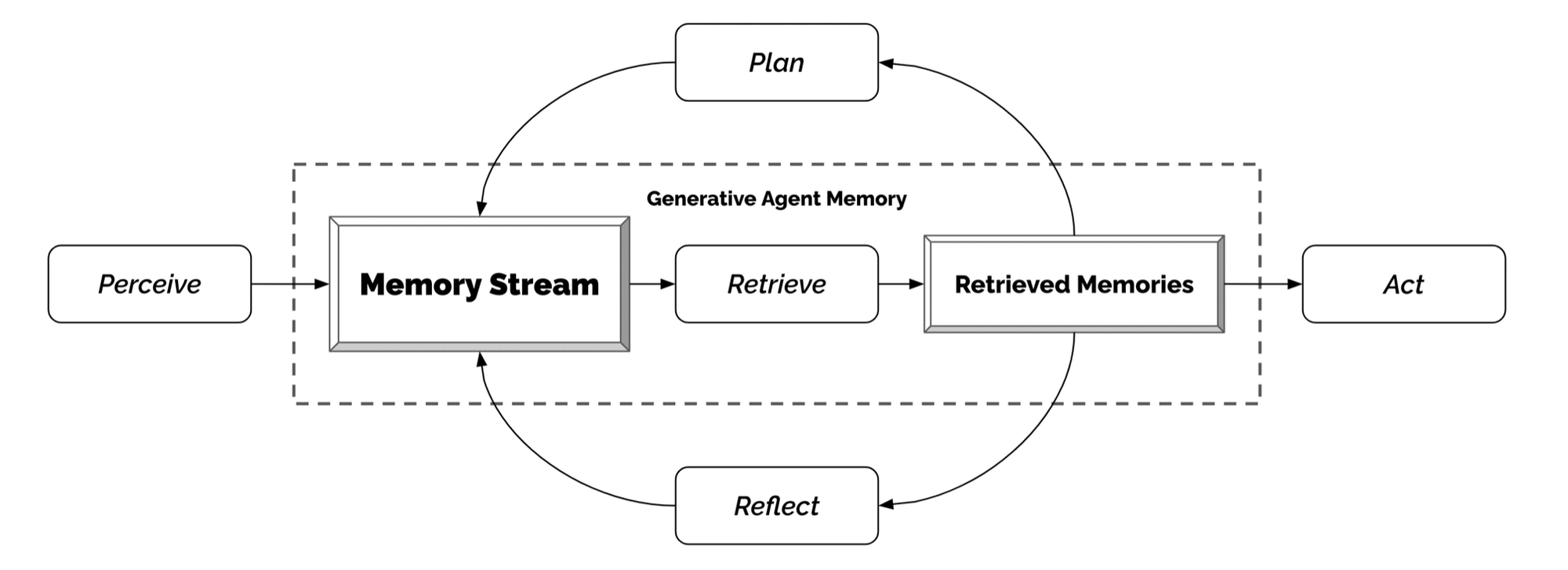

Planning as a Composite Action

In the Generative Agents paper, the agents try to imitate human behaviour. Every interaction with the external world is logged in the memory stream. When an agent has to plan its day, it retrieves past events from its memory (Retrieval) and then calls an LLM (Reasoning) to create the plan. Thus, Planning is a higher-order action which leverages two fundamental actions – Reasoning and Retrieval. Now the question arises: what are the implications of identifying Planning as a new Action type?
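The composition described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not the Generative Agents implementation: `MemoryStream`, `plan_day`, and `fake_llm` are hypothetical names, retrieval is reduced to "the most recent events", and the LLM is replaced by a stub so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class MemoryStream:
    """Hypothetical append-only log of the agent's experiences."""
    events: List[str] = field(default_factory=list)

    def log(self, event: str) -> None:
        self.events.append(event)

    def retrieve(self, k: int = 3) -> List[str]:
        # Retrieval (fundamental action): simplified to the k most recent events.
        return self.events[-k:]


def plan_day(memory: MemoryStream, llm: Callable[[str], str]) -> str:
    """Planning as a composite action: Retrieval followed by Reasoning."""
    past = memory.retrieve()                                   # Retrieval
    prompt = "Past events:\n" + "\n".join(past) + "\nWrite a plan for today."
    return llm(prompt)                                         # Reasoning


def fake_llm(prompt: str) -> str:
    # Stand-in for a real LLM call (assumption): builds on the last event it was shown.
    return "1. Continue from: " + prompt.splitlines()[-2]


memory = MemoryStream()
memory.log("had breakfast")
memory.log("met Sam at the cafe")
plan = plan_day(memory, fake_llm)
print(plan)
```

Note that `plan_day` contains no new primitive of its own; it only sequences two existing ones, which is what makes Planning composite rather than fundamental.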

Implications of adding new Actions in Action-Space

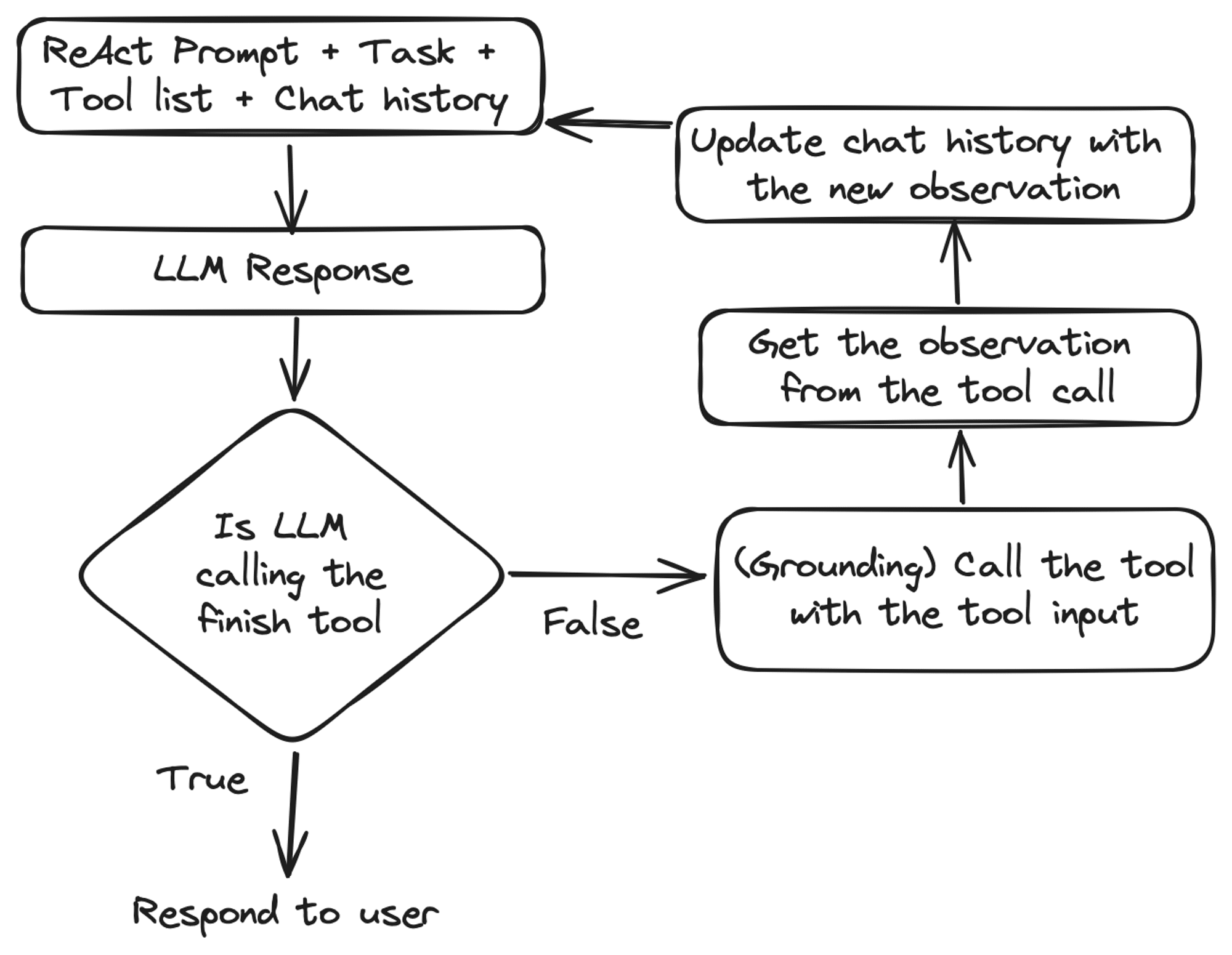

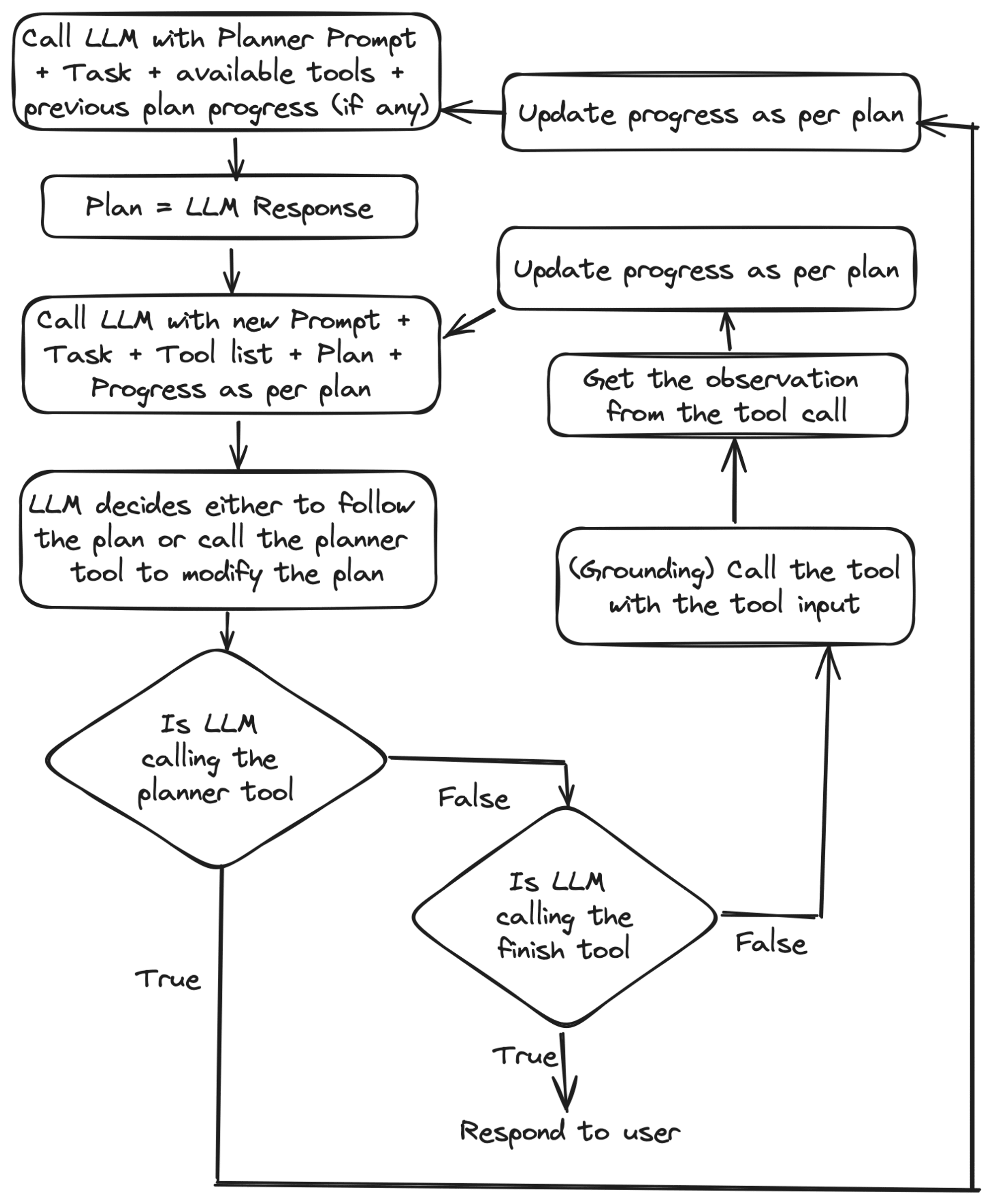

Every time we add a new type of Action in the Action-Space, the execution flow of the agent needs to be modified. To illustrate this, let’s compare two agent designs – a ReAct Agent and a Planner Agent.

Here is the logic of a vanilla ReAct agent:

Compare the above diagram with the following diagram which represents the logic of a Planner based agent:

Notice how the decision-making process, also known as agent design, changes when we incorporate new components, such as planning. Similarly, adding tools to interact with long-term memory will also alter the agent design. Conversely, the inclusion of another tool like a Google search tool won’t modify the agent design, as it’s just another tool for Grounding. In conclusion, if the addition of any tool or capability results in changes to the execution flow, we are most probably introducing new Fundamental or Composite Action types.
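The difference in execution flow can also be sketched in code. The two functions below are simplified assumptions about what a ReAct-style loop and a planner-style loop look like; `react_agent`, `planner_agent`, `scripted_decide`, and `scripted_plan` are hypothetical names, and the scripted functions stand in for LLM calls.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]


def react_agent(
    task: str,
    decide: Callable[[str, List[str]], Tuple[str, str, str]],
    tools: Dict[str, Tool],
    max_steps: int = 5,
) -> List[str]:
    """ReAct-style flow: reason, act, observe, repeat."""
    trace: List[str] = []
    for _ in range(max_steps):
        thought, tool_name, tool_input = decide(task, trace)   # Reasoning
        trace.append(f"thought: {thought}")
        if tool_name == "finish":
            break
        trace.append(f"observation: {tools[tool_name](tool_input)}")  # Grounding
    return trace


def planner_agent(
    task: str,
    make_plan: Callable[[str], List[Tuple[str, str]]],
    tools: Dict[str, Tool],
) -> List[str]:
    """Planner-style flow: plan once up front, then execute every step."""
    plan = make_plan(task)                                     # Planning (composite)
    trace = [f"plan: {plan}"]
    for tool_name, tool_input in plan:
        trace.append(f"observation: {tools[tool_name](tool_input)}")  # Grounding
    return trace


tools: Dict[str, Tool] = {
    "lookup": lambda q: f"lookup({q})",
    "search": lambda q: f"search({q})",  # an extra Grounding tool: just a new dict entry
}


def scripted_decide(task: str, trace: List[str]) -> Tuple[str, str, str]:
    # Stand-in for an LLM (assumption): search once, then finish.
    if not trace:
        return ("I should search first", "search", task)
    return ("I have enough information", "finish", "")


def scripted_plan(task: str) -> List[Tuple[str, str]]:
    # Stand-in for an LLM-generated plan (assumption).
    return [("search", task), ("lookup", task)]


react_trace = react_agent("apples", scripted_decide, tools)
planner_trace = planner_agent("apples", scripted_plan, tools)
print(react_trace)
print(planner_trace)
```

Adding the `search` tool required no change to either function body, whereas moving from `react_agent` to `planner_agent` changed the control flow itself.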

Parallel Actions

In the ReAct design, typically only one tool is called at a time. This means that when a new event happens, the agent cannot execute multiple actions (say, learning and grounding) at once.

If we draw inspiration from humans, we will realise that humans often take actions in parallel. Whenever we see new information, we use it to decide our next step (reasoning), we remember any similar instance from the past (retrieval), we save this new experience for future reference (learning) and we also interact with the external world (grounding).

Interestingly, OpenAI’s function calling now supports parallel function calls. MemGPT is one of the few agent frameworks trying to leverage parallel function calling in its agent design. It’s also among the earliest frameworks to support long-term memory. For those interested in delving deeper into memory-related actions (Learning and Retrieval), MemGPT serves as an excellent starting point.
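As a rough illustration of handling one event with several actions at once, here is a sketch using Python’s standard `asyncio.gather`. It does not use the OpenAI or MemGPT APIs; the functions `retrieve`, `learn`, `ground`, and `handle_event`, and the keyword-overlap retrieval, are assumptions made for the example.

```python
import asyncio
from typing import List, Tuple

# Toy long-term memory (assumption): a plain list of strings.
memory: List[str] = ["saw a red apple yesterday"]


async def retrieve(event: str) -> List[str]:
    # Retrieval: naive keyword overlap with earlier memories.
    words = set(event.split())
    return [m for m in memory if m != event and words & set(m.split())]


async def learn(event: str) -> None:
    # Learning: save the new experience for future reference.
    memory.append(event)


async def ground(event: str) -> str:
    # Grounding: stand-in for a call to an external tool.
    await asyncio.sleep(0)
    return f"acted on: {event}"


async def handle_event(event: str) -> Tuple[List[str], str]:
    # Run retrieval, learning, and grounding concurrently for one event.
    recalled, _, result = await asyncio.gather(
        retrieve(event), learn(event), ground(event)
    )
    return recalled, result


recalled, result = asyncio.run(handle_event("found an apple on the table"))
print(recalled)
print(result)
```

With real LLM and tool calls, each coroutine would spend most of its time waiting on I/O, which is where running them concurrently pays off.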

Conclusion & Next Steps

In this post, we examined various types of actions within the Agentic Action Space and the effects of adding new action types on decision-making procedures. In the next installment of the Towards AGI series, we will delve deeper, using actual code to explore innovations in decision-making procedures.