Introduction

Multi-agent systems built on state-of-the-art Large Language Models (LLMs) are increasingly used for tasks ranging from academic research to software development. End-to-end problem solving, however, remains a significant challenge in autonomous software development. In pursuit of that goal, we have developed a multi-agent system that uses GPT-4o and Sonnet-3.5 and has shown promising results in autonomous software development.

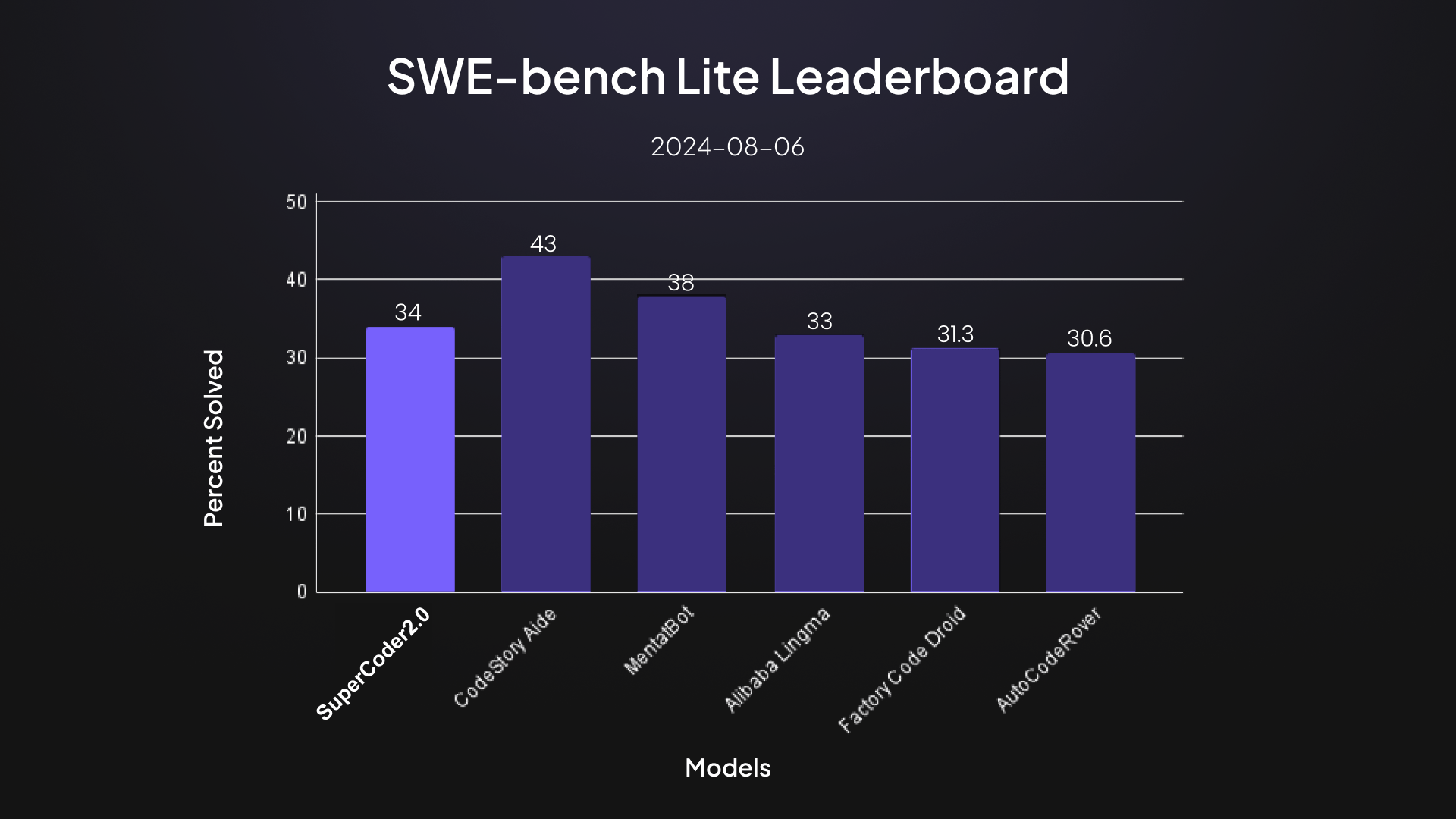

Our system was evaluated on SWE-Bench-Lite, a recently released benchmark dataset designed to evaluate functional bug fixes for complex real-world issues. SWE-Bench-Lite comprises 300 problems drawn from the larger SWE-Bench dataset and spans 12 repositories from the main dataset, providing a comprehensive yet manageable evaluation framework.

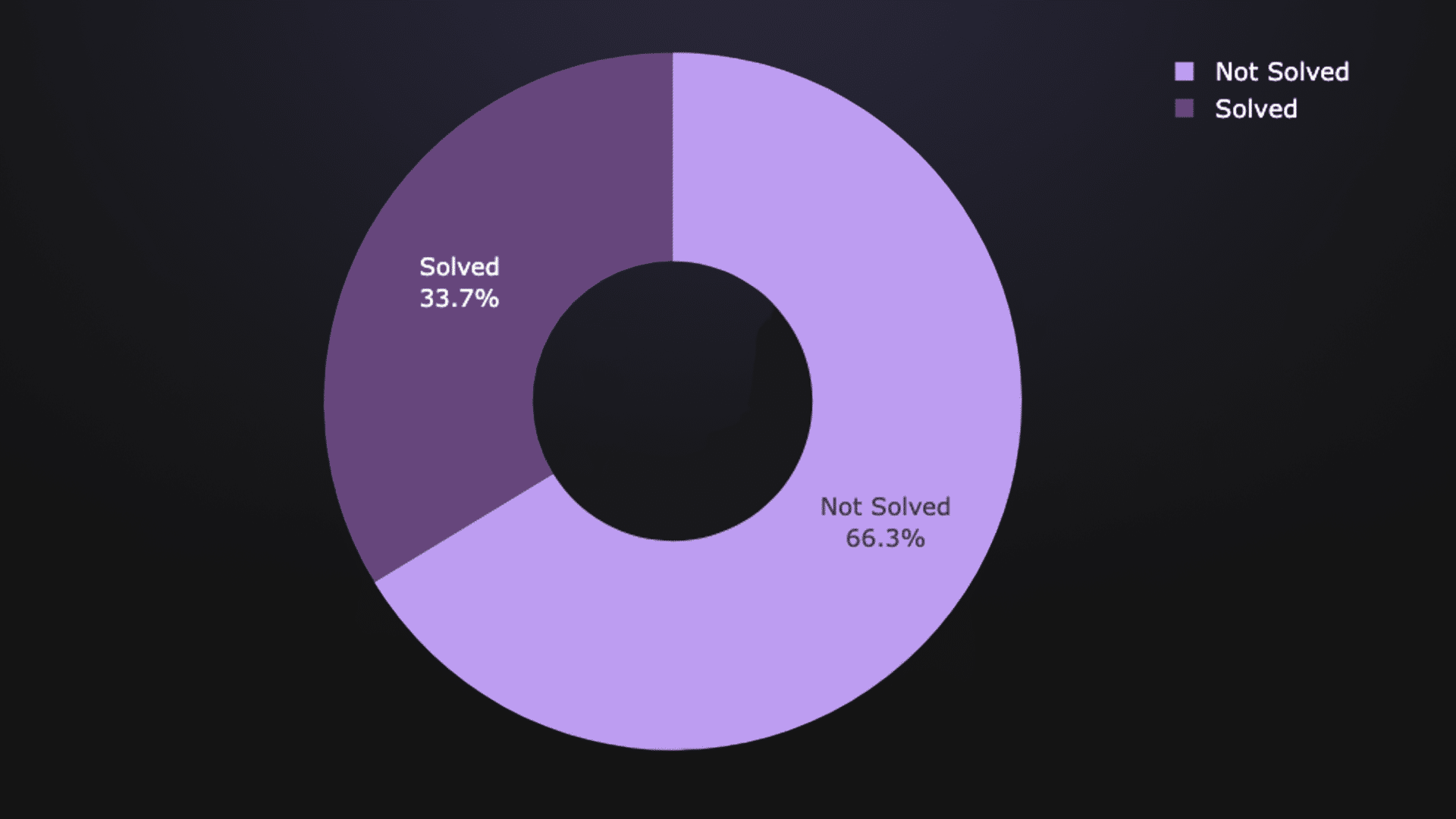

Our multi-agent system resolved 101 of the 300 problems in SWE-Bench-Lite, a score of roughly 34% on the benchmark. SWE-Bench-Lite assesses whether a system can produce a functional bug fix from a given problem statement, which makes it a suitable testbed for our multi-agent system.

Problem statement

The benchmark provides a comprehensive framework consisting of a GitHub repository, a problem statement, and corresponding environment setup details, including a commit ID for the base environment and another commit ID for the problem-specific setup. Additionally, it includes test cases categorized as “pass to pass” and “fail to pass.” The primary objective is to take the provided codebase and problem statement as inputs and generate a patch. This patch, when applied to the codebase, should enable the system to pass all the specified test cases, whether they are “pass to pass” or “fail to pass.”
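The inputs and the success criterion described above can be sketched as a small data structure. This is only an illustration: the class name, field names, and `is_resolved` helper are assumptions made for this example and are not the benchmark's actual schema or evaluation code.

```python
from dataclasses import dataclass, field


@dataclass
class BenchmarkInstance:
    """One benchmark problem. All field names are illustrative."""
    repo: str                 # GitHub repository the issue belongs to
    problem_statement: str    # issue text handed to the system
    base_commit: str          # commit ID of the base environment
    setup_commit: str         # commit ID of the problem-specific setup
    fail_to_pass: list[str] = field(default_factory=list)  # tests the patch must fix
    pass_to_pass: list[str] = field(default_factory=list)  # tests that must keep passing


def is_resolved(instance: BenchmarkInstance, test_results: dict[str, bool]) -> bool:
    """A patch counts as a fix only if every listed test passes after applying it."""
    required = instance.fail_to_pass + instance.pass_to_pass
    # A test missing from the results is treated as a failure.
    return all(test_results.get(test, False) for test in required)
```

Treating a missing test result as a failure is a deliberately strict choice in this sketch: a patch that prevents a test from running should not count as a fix.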

The evaluation process is facilitated by a dockerized setup provided by the benchmark team. This dockerized environment ensures reproducibility of results and significantly simplifies the evaluation process by eliminating the need for manual environment configuration. By leveraging Docker, we can seamlessly set up the required environment for each evaluation, ensuring consistency and reliability in the assessment of the generated patches.

The use of Docker for obtaining reproducible results has greatly streamlined our workflow, alleviating the challenges associated with environment setup and allowing us to focus on the core task of developing effective patches. This approach not only enhances the efficiency of the evaluation process but also ensures that the results are consistent and comparable across different runs and setups.

Solution

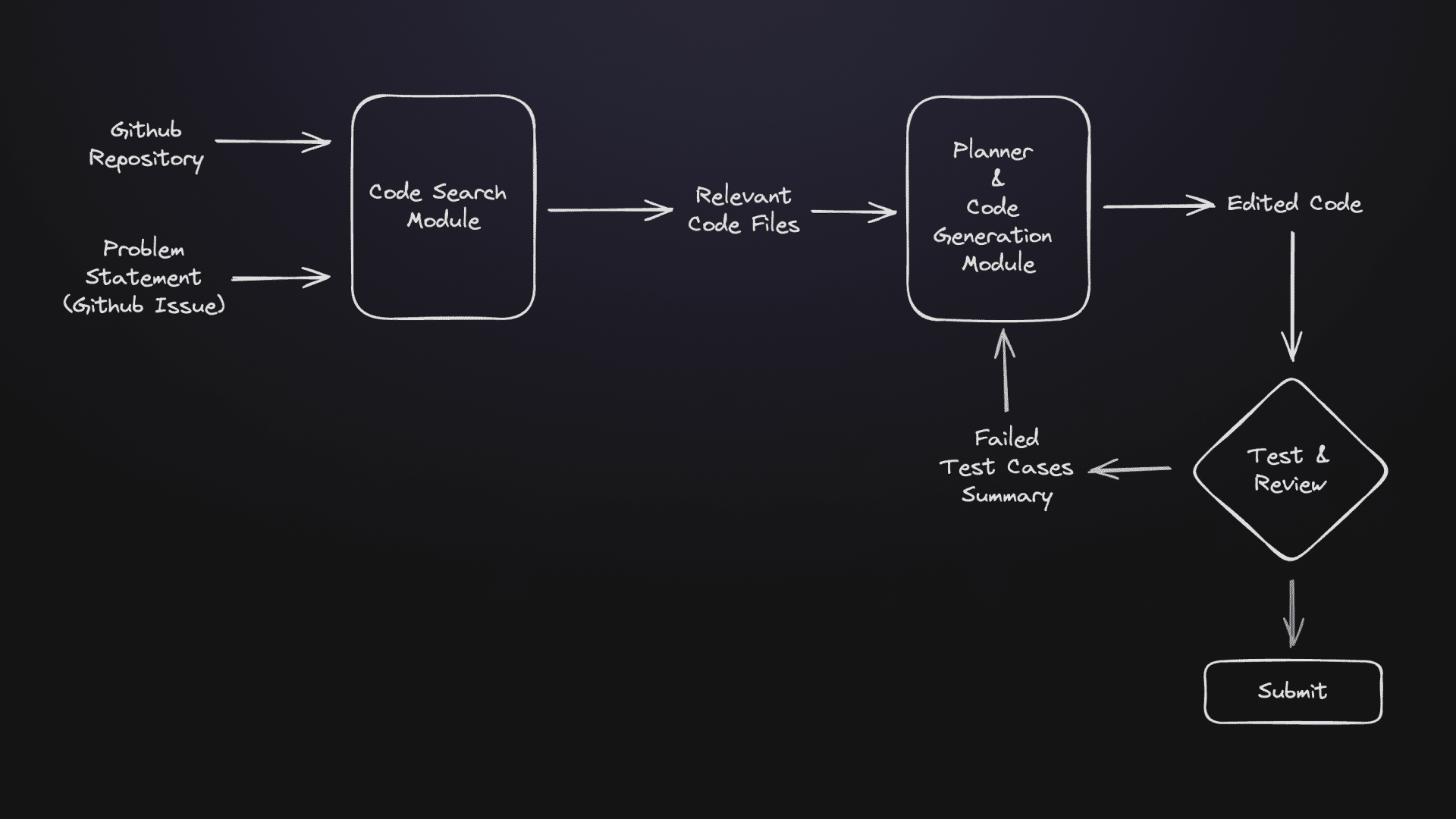

To tackle the problem of finding the correct solution in the given codebase based on the problem statement, we divided our approach into two distinct yet interrelated parts: Code Search and Code Generation.

1. Code Search: The first step is to identify the sections of the codebase that are pertinent to the given problem statement. This process, termed Code Search, is crucial for narrowing the codebase down to a manageable subset that is likely to contain the source of the issue.

2. Code Generation: Once the relevant code sections have been identified through the Code Search process, the next step is Code Generation. This involves creating a patch or a set of modifications that address the problem outlined in the problem statement. The generated code must be syntactically correct and functionally effective, ensuring that it resolves the issue without introducing new bugs. The generated patch is then applied to the codebase and tested against the provided test cases to validate its effectiveness.

By dividing the problem into these two parts, we can systematically and efficiently address the challenges of finding and fixing issues within large codebases. This structured approach not only improves the accuracy of our solutions but also enhances the overall robustness and reliability of our multi-agent system.

SuperCoder’s Architecture

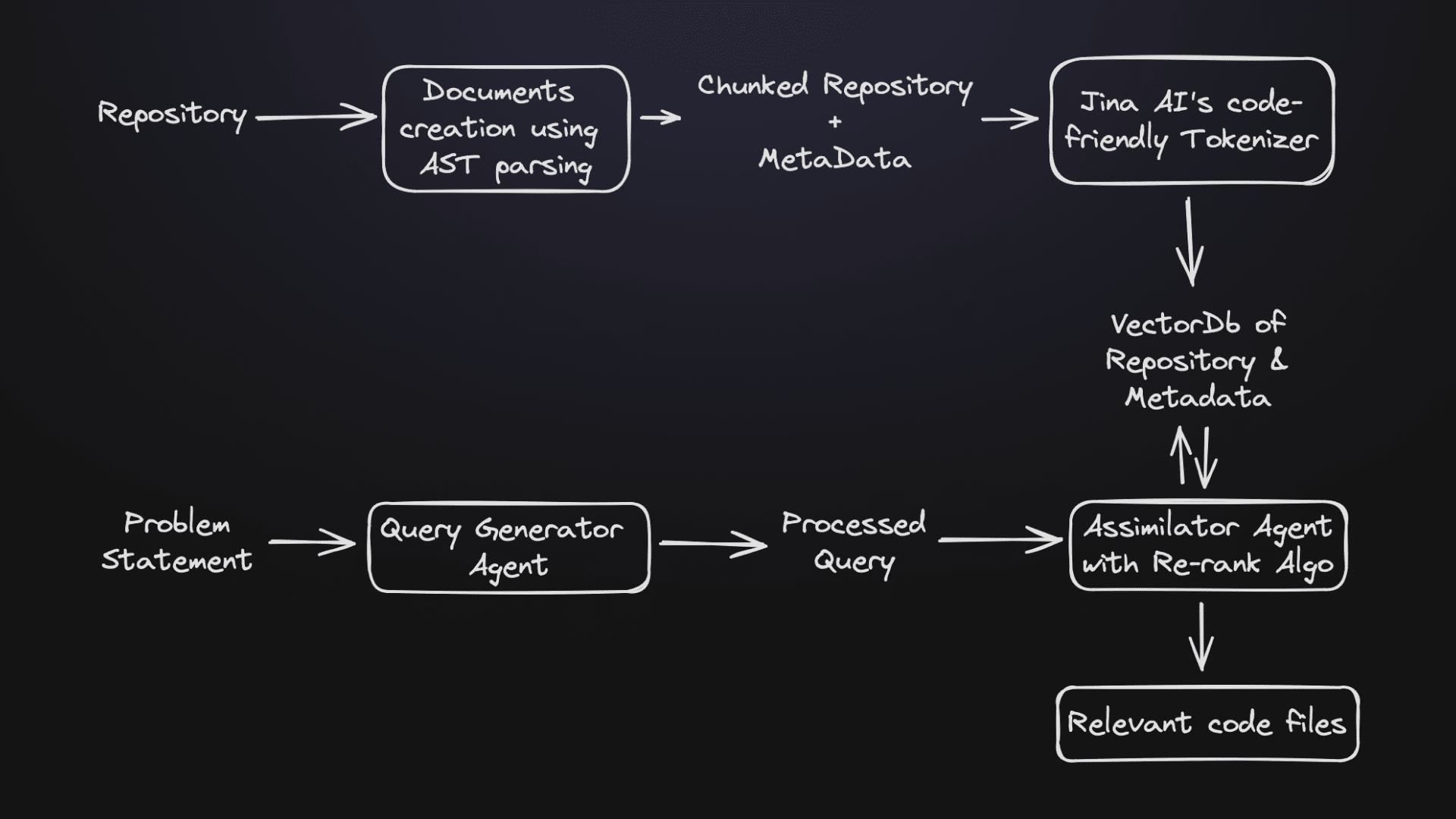

Code Search

In an ideal scenario, the entire codebase would fit into the context window of a Large Language Model (LLM), which would significantly simplify the problem-solving process. However, LLM context windows are limited, and even when the window is large enough, performance suffers when it is filled with irrelevant data. The challenge, therefore, is to enable the agent to navigate the codebase effectively and identify the locations where changes are needed to resolve the issue. These locations are henceforth referred to as buggy locations.

To address this challenge, we employed a two-tiered approach: an initial Retrieval-Augmented Generation (RAG) system followed by an agent-based system. This approach aims to reduce the search space for the agent, making the problem more tractable.

1. Initial Codebase Navigation: For each problem, we navigate the codebase and extract each method definition and class definition using the Abstract Syntax Tree (AST) module in Python. This extraction process involves creating documents enriched with metadata such as:

- File name

- Start and end lines of the method

- Parent class (if it’s a method)

- Methods or functions invoked by this method

- Signature

- Call graph

- Docstring

This detailed metadata is collected for every class, method, and function in the codebase.

2. Storing and Searching Metadata: Each of these documents is then stored in a vector database using the embedding model available at Jina AI. This particular embedding model was chosen due to its demonstrated potential for effective code search. Additionally, we incorporated full-text search capabilities to fetch any relevant documents directly mentioned in the problem statement. The results from these searches are combined into a hybrid search, and a reranker is employed to prioritize the candidate solutions, which in this case are the relevant files.

3. Query Generation: Given the problem statement, the QueryGeneratorAgent analyzes the problem for any files or methods explicitly mentioned and formulates refined queries to search through the vector database. Using these RAG-based queries, we fetch relevant and unique file names. This step results in a candidate set of files where the potential solution is likely to be found.

4. Reducing the Search Space: To further narrow down the search space and locate the most relevant files, we utilize a “file schema.” The file schema includes the entire class signature, method signatures, docstrings, function signatures, and import statements. This comprehensive schema is passed to an AssimilatorAgent which identifies the probable files by comparing the problem statement with the candidate file schemas. Importantly, all candidate file schemas can fit within the given input context window size of the LLM.
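As an illustration of the file schema described in step 4, the following sketch uses Python's `ast` module to keep imports, class and function signatures, and the first docstring line while dropping all bodies. The function name `build_file_schema` and the output layout are assumptions made for this example; the schema format SuperCoder actually uses is not given here.

```python
import ast


def build_file_schema(source: str) -> str:
    """Return a compact outline of a Python file: imports, signatures, docstrings."""
    tree = ast.parse(source)
    lines: list[str] = []

    def add_docstring(node, indent: str) -> None:
        doc = ast.get_docstring(node)
        if doc:
            # Keep only the first line so the schema stays small.
            lines.append(f'{indent}"""{doc.splitlines()[0]}"""')

    def describe(body, indent: str) -> None:
        for node in body:
            if isinstance(node, (ast.Import, ast.ImportFrom)):
                lines.append(indent + ast.unparse(node))
            elif isinstance(node, ast.ClassDef):
                bases = ", ".join(ast.unparse(base) for base in node.bases)
                header = f"class {node.name}({bases}):" if bases else f"class {node.name}:"
                lines.append(indent + header)
                add_docstring(node, indent + "    ")
                describe(node.body, indent + "    ")
            elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
                keyword = "async def" if isinstance(node, ast.AsyncFunctionDef) else "def"
                returns = f" -> {ast.unparse(node.returns)}" if node.returns else ""
                lines.append(f"{indent}{keyword} {node.name}({ast.unparse(node.args)}){returns}:")
                add_docstring(node, indent + "    ")
            # Function bodies and other statements are intentionally skipped.

    describe(tree.body, "")
    return "\n".join(lines)
```

Because bodies are omitted, an outline like this is usually far shorter than the file itself, which is what lets many candidate files fit in one prompt.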

The AssimilatorAgent then returns the Top-K relevant files, where K is a hyperparameter. These Top-K files represent the most probable locations where the solution is likely to be found. With these relevant files identified, we proceed to the next stage: Code Generation.
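The metadata extraction in step 1 can be sketched roughly as follows. This is a minimal illustration under stated assumptions: `extract_documents` and the dictionary keys are hypothetical names, and the sketch records only the direct callees of each function rather than building a full call graph.

```python
import ast
from typing import Optional


def extract_documents(source: str, file_name: str) -> list[dict]:
    """Return one metadata document per class, method, and function in a file."""
    tree = ast.parse(source)
    documents: list[dict] = []

    def visit(node: ast.AST, parent_class: Optional[str]) -> None:
        for child in ast.iter_child_nodes(node):
            if isinstance(child, ast.ClassDef):
                documents.append({
                    "kind": "class",
                    "name": child.name,
                    "file_name": file_name,
                    "start_line": child.lineno,
                    "end_line": child.end_lineno,
                    "docstring": ast.get_docstring(child),
                })
                visit(child, child.name)
            elif isinstance(child, (ast.FunctionDef, ast.AsyncFunctionDef)):
                # Names of everything called inside this definition.
                calls = sorted({
                    ast.unparse(n.func)
                    for n in ast.walk(child)
                    if isinstance(n, ast.Call)
                })
                documents.append({
                    "kind": "method" if parent_class else "function",
                    "name": child.name,
                    "file_name": file_name,
                    "start_line": child.lineno,
                    "end_line": child.end_lineno,
                    "parent_class": parent_class,
                    "signature": f"{child.name}({ast.unparse(child.args)})",
                    "calls": calls,
                    "docstring": ast.get_docstring(child),
                })
                visit(child, None)  # nested definitions are recorded as functions
            else:
                visit(child, parent_class)

    visit(tree, None)
    return documents
```

Each returned dictionary corresponds to one document that could then be embedded and stored in the vector database.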

By systematically narrowing down the search space through these meticulously designed steps, we enhance the efficiency and accuracy of locating the buggy locations within the codebase. This structured approach ensures that the agent can focus on the most relevant parts of the code, setting the stage for effective code generation and problem resolution.
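The description of step 2 does not say how the vector-search and full-text results are merged before reranking. One common option is reciprocal rank fusion, shown here purely as an assumed stand-in and not as SuperCoder's method; the function name, the `smoothing` constant of 60, and the file names in the usage note are illustrative.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], smoothing: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into a single ranking.

    Each document scores sum(1 / (smoothing + rank)) over the lists it appears in,
    so items ranked highly by more than one retriever rise to the top.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (smoothing + rank)
    # Sort by score (descending), then by ID so that ties are deterministic.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))
```

For example, fusing a vector ranking `["a.py", "b.py", "c.py"]` with a full-text ranking `["b.py", "d.py"]` places `b.py` first, because it is the only file both retrievers returned. The fused list would then be passed to the reranker.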

SuperCoder’s code search process

Code Generation

In code generation, identifying and addressing buggy locations within the codebase is a critical step. This process begins with pinpointing the ‘k’ most likely and relevant files associated with the problem statement. To accomplish this, we employ a PlannerAgent, a sophisticated tool designed to navigate through the code files, identify buggy locations, and determine the necessary changes required to rectify the issues. These buggy locations can be situated within methods, functions, or even at the top-level code. In some instances, the PlannerAgent may need to insert an entirely new method to resolve the problem or at least propose a viable solution.

When generating Python code, one of the significant challenges is handling indentation, which can complicate the insertion of new code. To mitigate this, we replace the entire method or function rather than inserting code piecemeal. The CodeGenerationAgent regenerates the whole method, since a single method definition is unlikely to exceed the 4k output-token limit. We have not hit that limit so far, but we are looking for more efficient approaches in case it arises. Once the code is generated, we use the Abstract Syntax Tree (AST) module to replace the method body.
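A minimal sketch of whole-function replacement follows. It assumes one particular strategy: the `ast` module locates the line span of the old definition, and the regenerated code is re-indented to match and spliced in as text. The name `replace_function` is hypothetical, and the sketch picks the first definition with a matching name, so same-named methods in different classes would need an extra qualifier.

```python
import ast
import textwrap


def replace_function(source: str, name: str, new_code: str) -> str:
    """Swap the definition of function `name` in `source` for `new_code`."""
    tree = ast.parse(source)
    target = next(
        (node for node in ast.walk(tree)
         if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) and node.name == name),
        None,
    )
    if target is None:
        raise ValueError(f"function {name!r} not found")

    lines = source.splitlines(keepends=True)
    def_line = lines[target.lineno - 1]
    # Reuse the original indentation of the `def` line (decorators above it are kept).
    indent = def_line[: len(def_line) - len(def_line.lstrip())]
    new_block = textwrap.indent(textwrap.dedent(new_code).strip("\n"), indent) + "\n"

    patched = "".join(lines[: target.lineno - 1]) + new_block + "".join(lines[target.end_lineno:])
    ast.parse(patched)  # raises SyntaxError if the patched file is not valid Python
    return patched
```

Dedenting and then re-indenting the generated code means the model can emit the function at any indentation level, which sidesteps the indentation problem described above.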

We have devised distinct mechanisms to insert code for pre-existing methods, nested methods, functions, and top-level code, though this aspect requires further exploration and refinement. After generating the code, we execute the corresponding test cases. If any test cases fail, the execution feedback is propagated back to the code generator and subsequently to the PlannerAgent. Each time the generated solution fails to pass the held-out tests, the entire setup is rerun, ensuring a continuous loop of improvement and refinement.
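The feedback loop can be sketched as a retry loop in which test output from a failed attempt is passed into the next round. The three callables stand in for the PlannerAgent, the CodeGenerationAgent, and the test runner; their signatures, the `repair_loop` name, and the `max_attempts` cap are assumptions made for this example.

```python
from typing import Callable, Optional


def repair_loop(
    plan: Callable[[str, str], str],               # (problem, feedback) -> change plan
    generate: Callable[[str, str], str],           # (plan, feedback) -> patch
    run_tests: Callable[[str], tuple[bool, str]],  # patch -> (passed, test output)
    problem_statement: str,
    max_attempts: int = 3,
) -> Optional[str]:
    """Retry plan -> generate -> test, feeding failures into the next attempt."""
    feedback = ""
    for _ in range(max_attempts):
        change_plan = plan(problem_statement, feedback)
        patch = generate(change_plan, feedback)
        passed, output = run_tests(patch)
        if passed:
            return patch
        feedback = output  # execution feedback for the next round
    return None  # no passing patch within the attempt budget
```

Bounding the number of attempts keeps cost predictable; whether SuperCoder caps its reruns is not stated here.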

In summary, our approach to code generation involves identifying buggy locations, regenerating entire method bodies to avoid indentation issues, and using the AST module for code replacement. It is complemented by a feedback loop that drives continuous improvement in the accuracy of code generation.

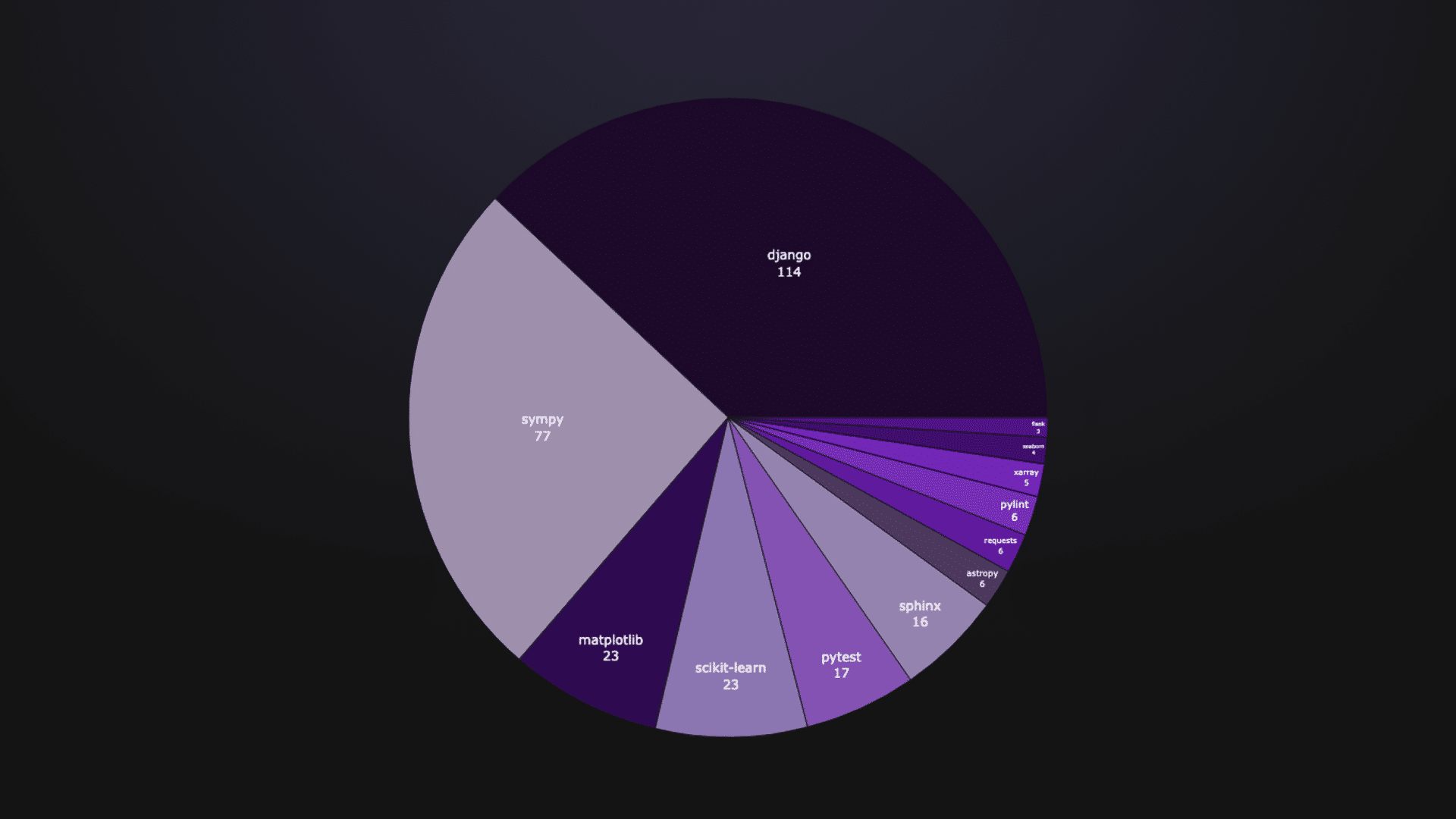

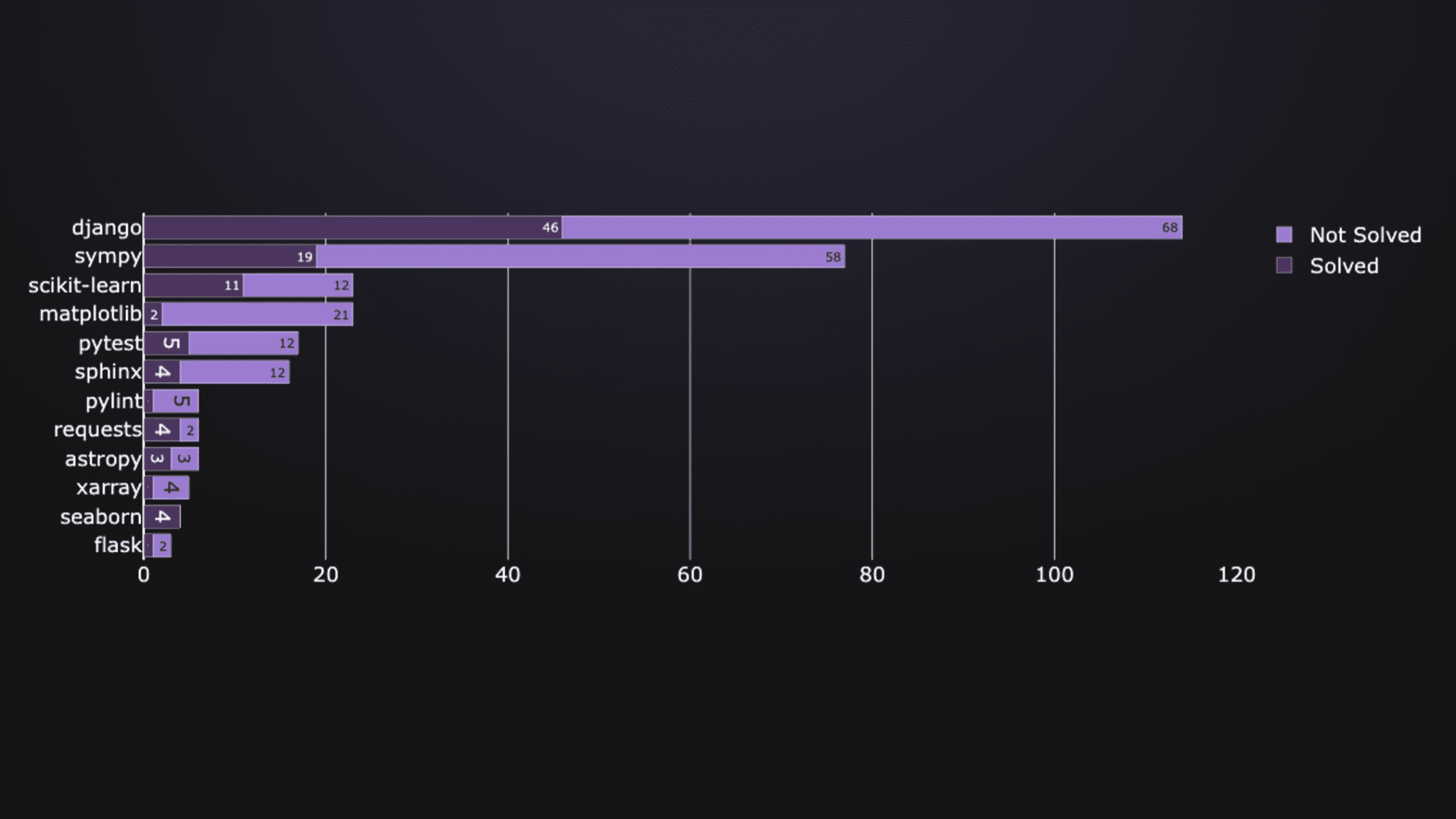

Framework-wise distribution of tests in SWE Lite

While designing the system, we observed on multiple occasions that Sonnet performed marginally better than GPT-4o at code generation, whereas GPT-4o was better at planning and at filtering the top-K files. We solved 101 of the 300 instances; a detailed analysis follows.

The SWE-Bench Lite dataset consists of 300 instances across 12 repositories, distributed as shown above. Django contributes the most, with 114 instances (38%), and Flask the fewest, with only 3 instances (1%).

SuperCoder’s performance on SWE-Bench Lite

Of the 300 instances in the dataset, SuperCoder2.0 solved 101, a rate of roughly 34%. This shows that our approach can solve coding problems across diverse repositories. The two-tiered code search approach proved successful: the RAG system reduces the search space by locating the top K files relevant to the problem statement, and an agentic step then identifies the exact file where the edit should be made. Locating the right file plays a major role in solving a given problem.

Successful patch generation across repositories

The figure above shows the distribution of the 101 solved instances. Most of them belong to Django (46 instances, 45.5% of the solved instances). SuperCoder2.0 also resolved all the Seaborn instances, which we attribute to its agentic approach to code localization and generation.
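The percentages quoted in this analysis follow directly from the instance counts. The short check below recomputes them; the variable names and the `percent` helper are introduced only for this example, and the last line derives a Django-specific resolution rate from the same counts.

```python
TOTAL_INSTANCES = 300   # instances in SWE-Bench Lite
SOLVED = 101            # instances solved overall
DJANGO_TOTAL = 114      # Django instances in the dataset
DJANGO_SOLVED = 46      # Django instances solved
FLASK_TOTAL = 3         # Flask instances in the dataset


def percent(part: int, whole: int) -> float:
    """Share of `part` in `whole`, as a percentage rounded to one decimal."""
    return round(100 * part / whole, 1)


print(percent(SOLVED, TOTAL_INSTANCES))        # 33.7, reported as roughly 34%
print(percent(DJANGO_TOTAL, TOTAL_INSTANCES))  # 38.0
print(percent(FLASK_TOTAL, TOTAL_INSTANCES))   # 1.0
print(percent(DJANGO_SOLVED, SOLVED))          # 45.5
print(percent(DJANGO_SOLVED, DJANGO_TOTAL))    # 40.4, Django-only resolution rate
```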

Conclusion and next steps

We used a RAG-based flow together with a file schema to locate the correct file. This has shown good potential, but file and method localization can be improved further. Several other works in the same domain have used Repo Map to find the correct locations, and that technique could be incorporated. Beyond this, we identified a few bottlenecks that we are working on:

- When the problem statement gives no clear indication of what the buggy location is or where it lies, identifying it is the next big step.

- Real-world problems will not always be phrased like the problem statements in the benchmarking dataset, so an autonomous AI developer must be able to ask clarifying questions, either of a human developer or of a proxy agent acting as one.

- Several works have used test cases to localize faulty locations effectively, but this depends on test cases being available.

This is a good starting point from which we and others can begin tackling this exciting problem.