Introduction

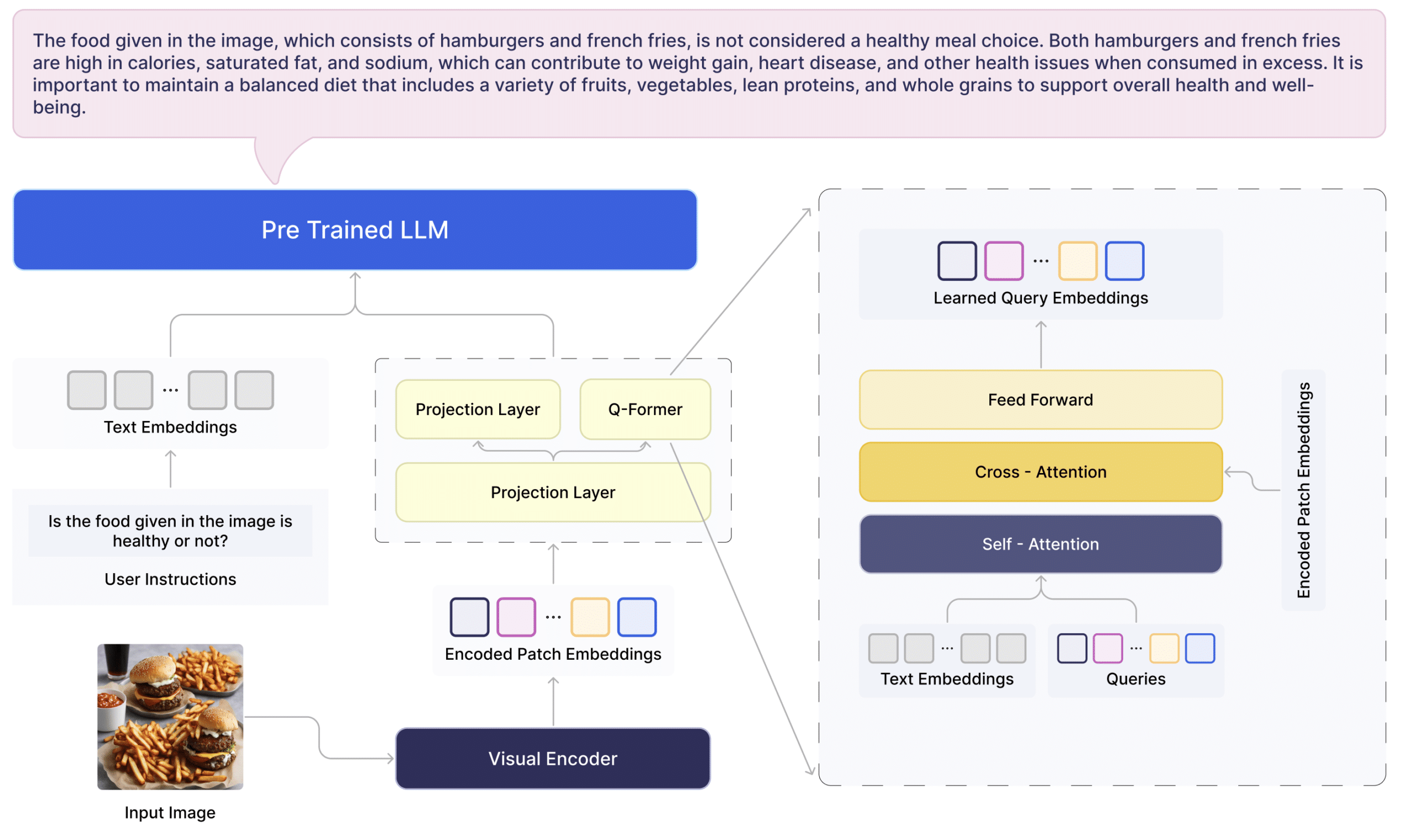

VEagle significantly improves the textual understanding & interpretation of images. The unique feature of VEagle is in its architectural change along with a combination of different components: a vision abstractor from mPlugOwl, Q-Former from InstructBLIP, and the Mistral language model. This combination allows VEagle to better understand and interpret the connection between text and images achieving state-of-the-art results. VEagle starts with a pre-trained vision encoder and language model and is trained in two stages. This method helps the model effectively use information from images and text together.

Our tests, conducted using standard Visual Question Answering (VQA) benchmarks, show that VEagle performs better than existing models in understanding images. The success of VEagle can be attributed to its architecture and the careful selection of datasets for training. This makes VEagle a significant step forward in multimodal AI research, offering a more accurate and efficient way to interpret combined text and image data.

Key Insights

- VEagle has surpassed most state-of-the-art (SOTA) models in major benchmarks, capable of outperforming competitors in various tasks and domains.

- Using an optimized dataset, VEagle achieves high accuracy and efficiency. This demonstrates the model’s effective learning from limited data. We meticulously curated a dataset of 3.5 million examples, specifically tailored to enhance visual representation learning.

- VEagle’s architecture is a unique blend of components, including a visionary abstractor inspired by mPlugOwl, the Q-Former module from InstructBLIP, and the powerful Mistral language model. This innovative architecture, complemented by an additional projectional layer and architectural refinements, empowers VEagle to excel in multimodal tasks.

Results

Our experimental results demonstrate the effectiveness of our approach, with significantly improved performance across various metrics.

Baseline vs Proposed Protocol

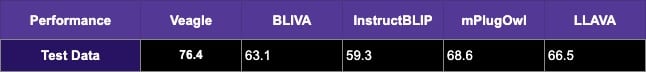

We benchmarked VEagle against four advanced models: BLIVA, instructBLIP, mPlugOwl, and LLAVA. Each model was tested with an image and a related question, and their responses were assessed for accuracy using GPT-4. Our model showed a significant improvement in accuracy over these baselines, as detailed in Table.

In-House Test Dataset

To evaluate VEagle’s adaptability and general performance, we employed an in-house test dataset comprising various tasks including captioning, OCR, and different types of visual question-answering. This dataset was novel to our model, ensuring an unbiased evaluation. The results, as shown in Table 2, indicate the promising performance of VEagle across all task types.

Qualitative Analysis

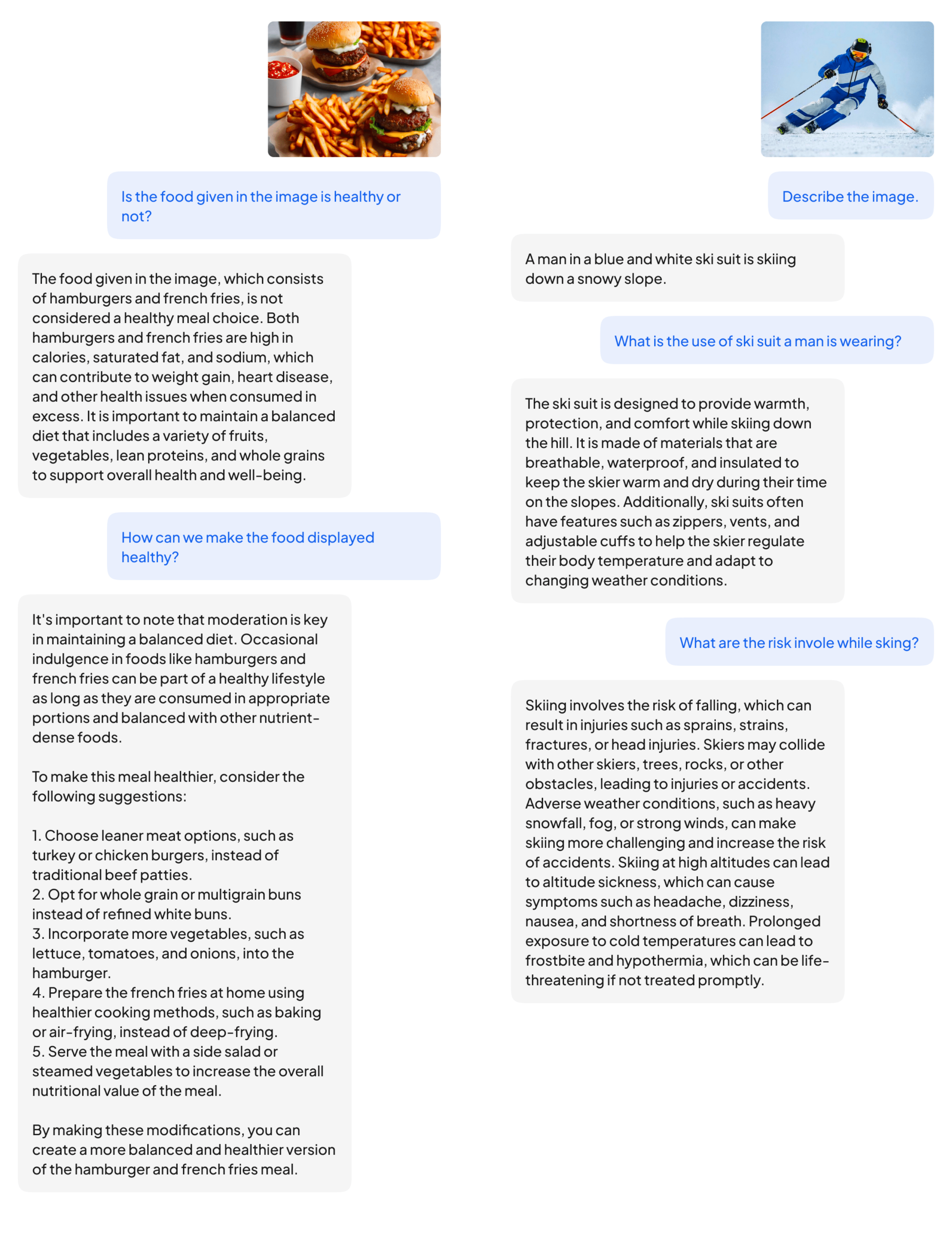

We also conducted a qualitative analysis using a set of complex and challenging tasks. This analysis aimed to assess VEagle’s performance in intricate scenarios beyond quantitative metrics. The results, illustrated in Figure 5, demonstrate the model’s effectiveness in handling these tasks.

Model Architecture

Encoding Visual representation

The VEagle model incorporates a crucial image encoder, the ViT-L/14 from mPlugOwl, for extracting meaningful visual representations. This encoder is efficient at understanding image semantics and context, vital for accurate predictions and relevant outputs. mPlugOwl’s unique training approach combines a trainable visual encoder with a frozen pre-trained language model. This method captures both low-level details and higher semantic information in images, aligning it effectively with linguistic data. The model’s performance is maintained while utilizing diverse image-caption pairs from datasets like LAION-400M, COYO-700M, Conceptual Captions, and MSCOCO.

Integrating Visual and Textual Data

VEagle represents a leap in multimodal representation learning. It combines visual inputs with textual data, ensuring a more holistic understanding of content. This integration allows VEagle to process and interpret both data forms synergistically, leading to enhanced AI performance in tasks requiring a nuanced understanding of visual and textual elements.

Dynamic Encoding and Projection

A cornerstone of VEagle’s architecture is its dynamic encoding mechanism, which goes beyond traditional projection layers. The model encodes visual data, aligning it closely with textual representations. However, to overcome the limitations of projection layers in capturing all the necessary information for the Large Language Model (LLM), we have introduced a multi-layer perceptron along with Q-Former. This innovative addition further refines VEagle’s ability to understand and correlate complex visual-textual relationships, enhancing its capacity to process and interpret multimodal data effectively.

Leveraging Advanced Language Models

At the heart of VEagle’s prowess is its utilization of cutting-edge language models. These models play a crucial role in interpreting textual data, providing a rich semantic understanding that complements the visual data inputs. This integration is key to VVagle’s enhanced performance in complex multimodal tasks, setting it apart from existing models. We chose Mistral 7B as our Language Model and we could see a significant performance boost.

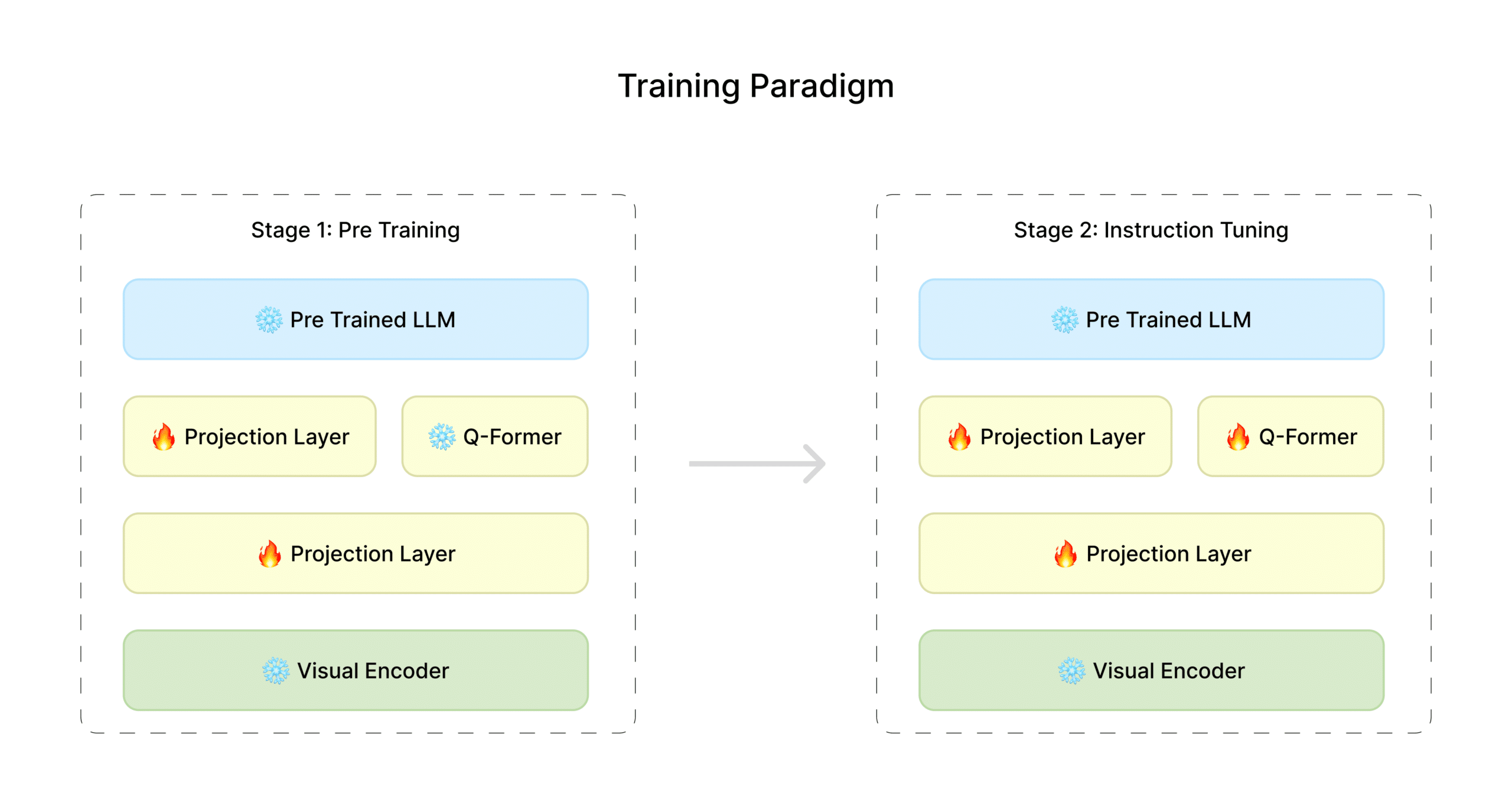

Training Process

VEagle’s training involves a two-stage process: pre-training and fine-tuning. Using specific datasets tailored for multimodal tasks, this approach enables VEagle to learn a wide range of representations, adapting to various tasks effectively. The training methodology is meticulously designed to maximize the model’s learning potential, ensuring robust performance across diverse multimodal applications.

Training

- Trained by: SuperAGI Team

- Hardware: NVIDIA 8 x A100 SxM (80GB)

- LLM: Mistral 7B

- Vision Encoder: mPLUG-OWL2

- Duration of pretraining: 12 hours

- Duration of finetuning: 25 hours

- Number of epochs in pretraining: 3

- Number of epochs in finetuning: 2

- Batch size in pretraining: 8

- Batch size in finetuning: 10

- Learning Rate: 1e-5

- Weight Decay: 0.05

- Optmizer: AdamW

Datasets

VEagle’s training approach is marked by innovative dataset enhancement techniques. To address single-word answer datasets, the team employed GPT-4 and Mixtral, transforming concise responses into detailed, nuanced answers. This method significantly improved the model’s comprehension capabilities across various query types. Additionally, the team tackled the issue of question repetition in datasets. By generating diverse and distinct questions, they enhanced dataset variety, which helped in reducing redundancy. This proactive approach not only enriched the training material but also bolstered the model’s ability to generalize and perform effectively across diverse queries. The meticulous process of dataset augmentation and refinement was instrumental in optimizing VEagle’s performance and reliability.

Steps to try VEagle

1. Clone the repository

source venv/bin/activatechmod +x install.sh./install.shpython evaluate.py --answer_qs \ --model_name veagle_mistral \ --img_path images/food.jpeg \ --question "Is the food given in the image is healthy or not?"

Conclusion

The VEagle multi-modal model stands out as a formidable contender, consistently outperforming established benchmarks in diverse domains. Through the strategic fusion of various modules curated from extensive research, VEagle showcases remarkable performance, not only meeting but exceeding the expectations set by existing models. However, our work also reveals areas that still require refinement, emphasizing the ongoing nature of the pursuit of perfection. This acknowledgment underscores the need for further exploration and optimization, recognizing that the path to excellence in multi-modal models like VEagle continues to unfold. As we navigate this landscape,

Qualitative & quantitative results produced by our VEagle model showcase a spectrum of its diverse capabilities. These demonstrations include intricate visual scene understanding and reasoning, multi-turn visual conversation, and more.

VEagle remains a promising catalyst for future advancements in Vision-Language Models, beckoning further investigation and innovation in this dynamic field

To explore the technical aspects and code implementation of VEagle, you can visit the VEagle repository on GitHub at https://github.com/superagi/Veagle