Software ate the world, AI ate the software, and now agents are eating AI

Agents are arguably an early design pattern of what could eventually evolve into the holy grail of AGI.

But the reality is that agents are cool, yet pretty crappy at doing things reliably in the real world. It is like the internet in 1998: the power of interconnected information networks was a step change from anything that came before, but it sucked for doing anything useful.

One of the most common pain points in developing autonomous AI agents is efficient agent trajectory fine-tuning, or, put simply, how you make agents learn from past runs.

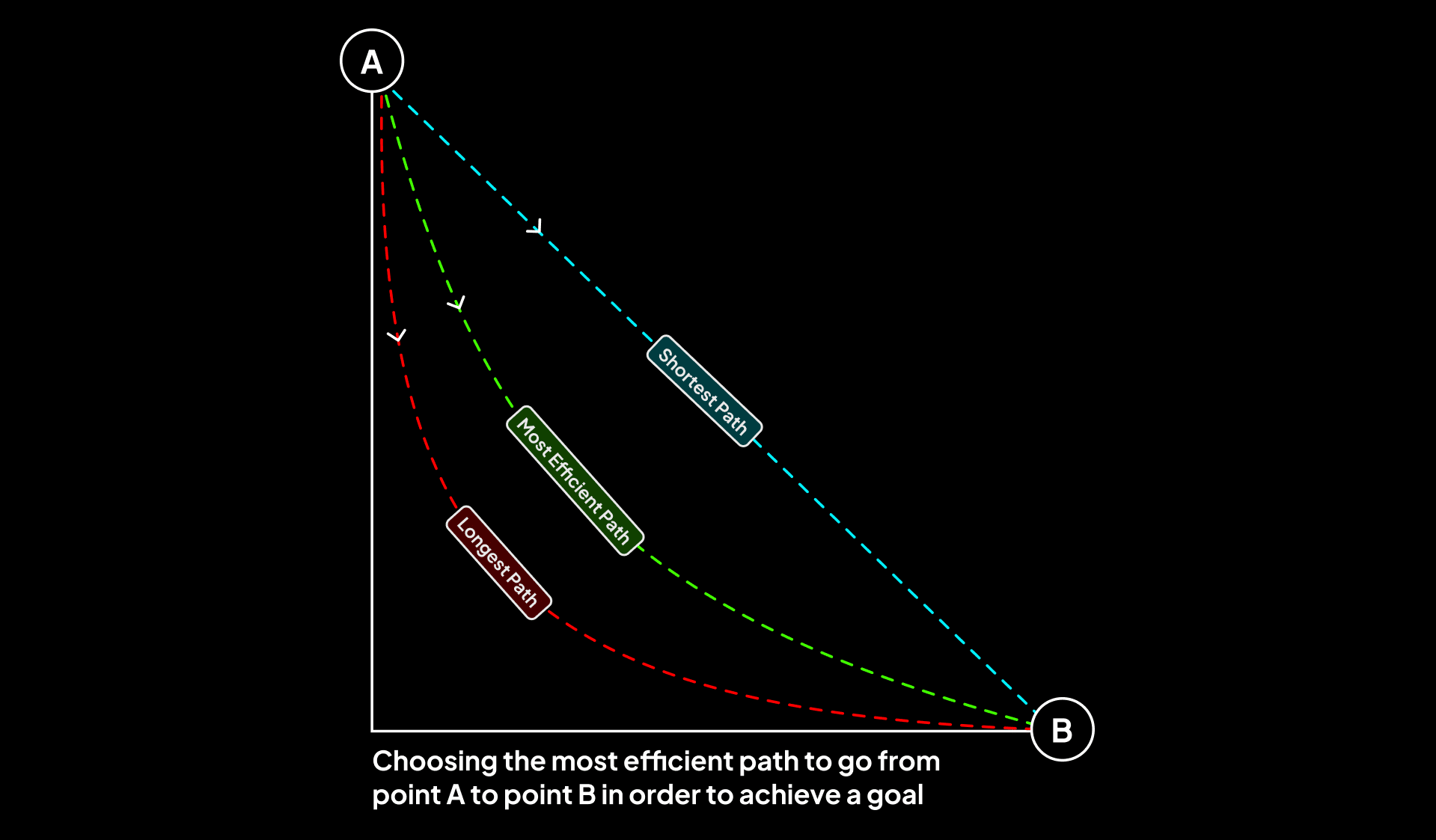

Typically, on each attempt to achieve a goal, an AI agent operates as if it is starting from scratch: it does not use the learnings from previous runs and follows a relatively random path from point A to point B.

This becomes a problem when you need agents to achieve objectives reliably. The degrees of freedom that make agents powerful also turn out to be their Achilles heel when crossing the chasm from cool toy technology to real production deployment.

SuperAGI is addressing this by adding the notion of ‘Agent Instructions’. If the agent’s goal is to get from point A to point B, then instructions are like asking for directions on how to get there.

What’re Agent Instructions?

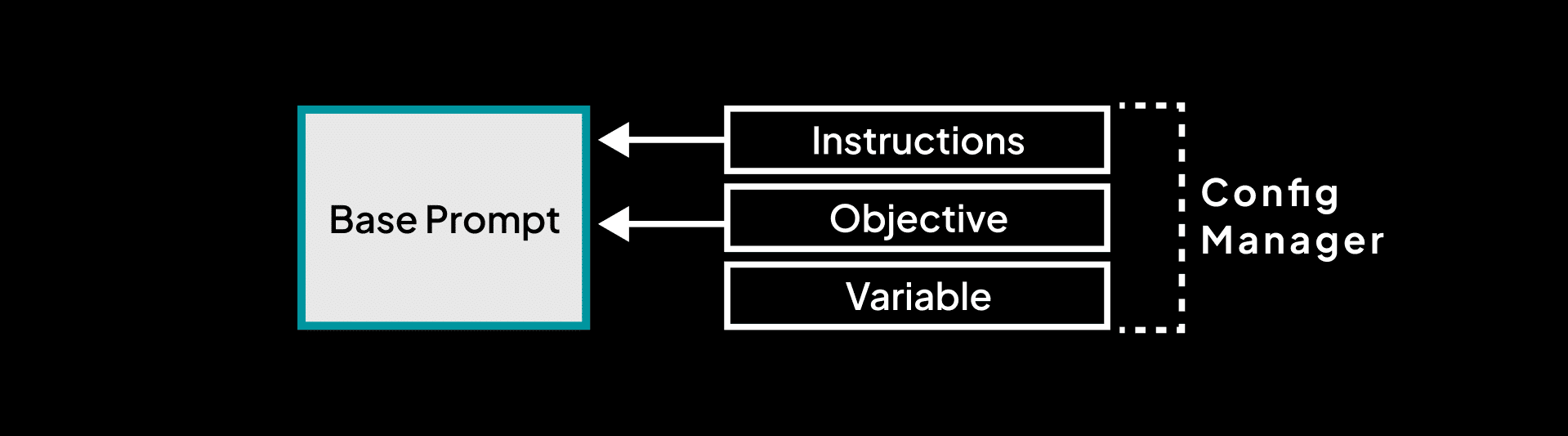

Essentially, Agent Instructions are a set of directives added to an agent during its provisioning phase.

Acting as a guidebook, these instructions help the agent achieve its objective more effectively, reducing the need for ‘first principles’ thinking on every run and helping it avoid the dreaded ‘Agent Loops’.

These instructions are appended to the agent’s base prompt via the ‘config manager’, which makes them reusable across subsequent runs.

We have made Agent Instructions a core part of the agent initialization phase, as part of the Config manager in SuperAGI. You can add multiple instructions for a particular goal and define the instruction temperature, that is, how strictly you want the agent to follow the instructions. At a higher temperature, the agent treats the instructions as general guidance; at a lower temperature, it tries to follow them with the least deviation.
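To make the idea concrete, here is a minimal sketch of how instructions and an instruction temperature could be folded into a base prompt. Everything in it is an assumption for illustration: `AgentConfig`, `build_prompt`, the 0.0 to 1.0 temperature range, and the 0.5 cutoff are hypothetical names and values, not SuperAGI's actual Config manager API.

```python
from dataclasses import dataclass, field


@dataclass
class AgentConfig:
    """Hypothetical config object; not SuperAGI's real Config manager."""
    goal: str
    instructions: list[str] = field(default_factory=list)
    # Assumed scale: 0.0 = follow strictly, 1.0 = treat as loose guidance.
    instruction_temperature: float = 0.0


def build_prompt(base_prompt: str, config: AgentConfig) -> str:
    """Append the goal and the numbered instructions to the base prompt."""
    if not 0.0 <= config.instruction_temperature <= 1.0:
        raise ValueError("instruction_temperature must be between 0.0 and 1.0")

    # Assumed mapping: the temperature only changes how the instructions are framed.
    if config.instruction_temperature < 0.5:
        framing = "Follow these instructions as closely as possible:"
    else:
        framing = "Treat these instructions as general guidance:"

    lines = [base_prompt, f"Goal: {config.goal}"]
    if config.instructions:
        lines.append(framing)
        lines.extend(
            f"{number}. {text}"
            for number, text in enumerate(config.instructions, start=1)
        )
    return "\n".join(lines)


if __name__ == "__main__":
    config = AgentConfig(
        goal="Summarize this week's AI news",
        instructions=["Use the search tool first.", "Cite every source."],
        instruction_temperature=0.2,
    )
    print(build_prompt("You are an autonomous agent.", config))
```

Because the instructions live in the config rather than in a single run, the same `AgentConfig` can be reused to build the prompt for every subsequent run.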

Recursive Trajectory Fine-Tuning

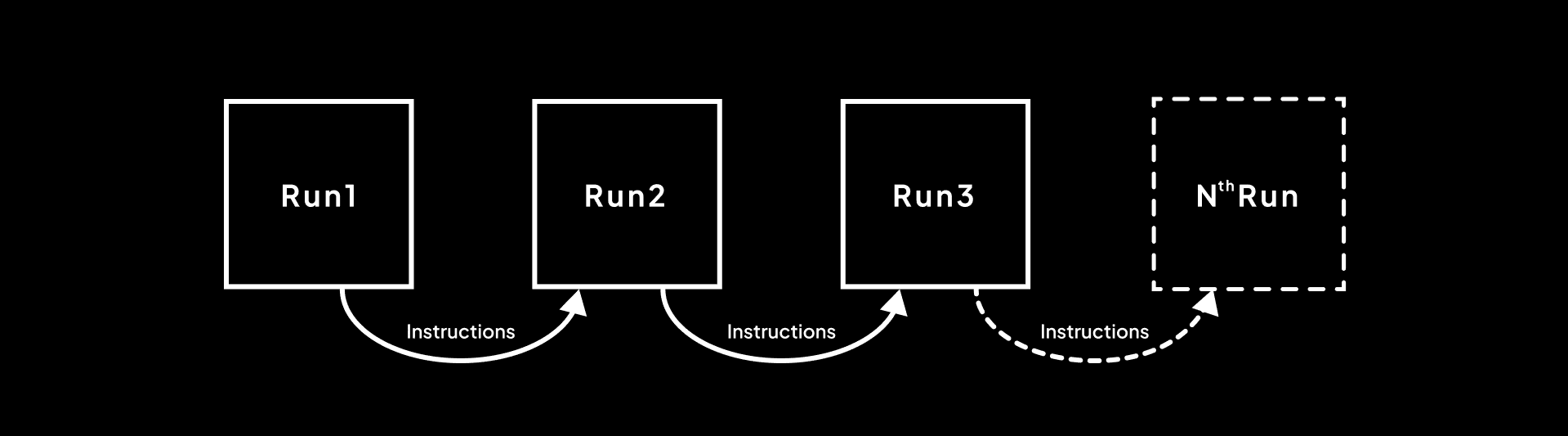

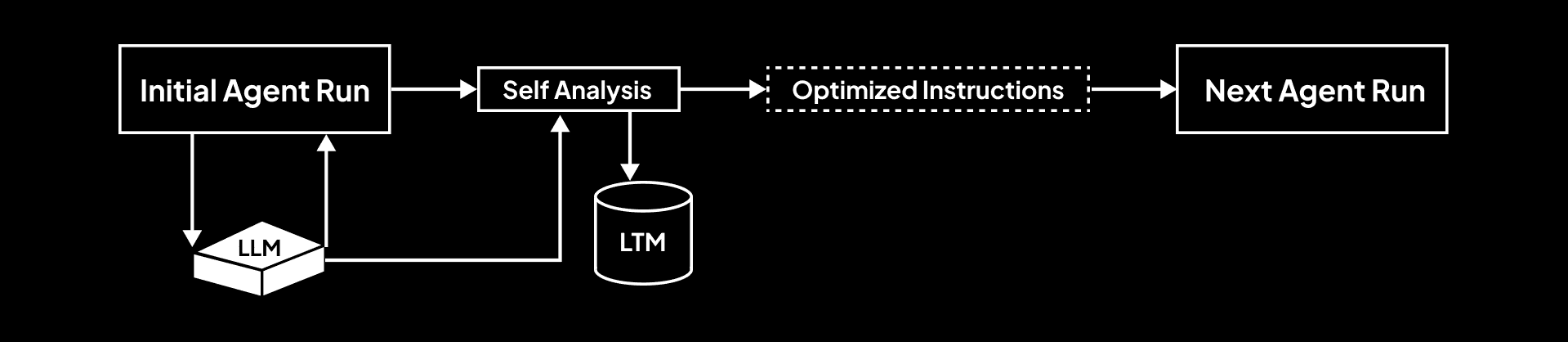

Looking forward, the proposed V2 of Agent Instructions in SuperAGI aims to use large language models (LLMs) to enable a completely autonomous, self-optimizing process of recursive agent trajectory fine-tuning.

Post-execution, the agent would perform a self-analysis, debugging its trajectory and identifying areas of potential improvement. It would then compile an optimized instruction set for the next run, essentially creating a recursive self-improvement loop for trajectory fine-tuning.

This automatically generated instruction set becomes the input for the next run. You can bootstrap the initial run by giving feedback at every step; once the agent has tuned its ideal trajectory, you can drop the step-by-step feedback for subsequent runs.
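The loop described above could be sketched roughly as follows. This is an illustration under stated assumptions, not the proposed V2 implementation: `refine_instructions`, `fake_run_agent`, and `fake_analyze` are hypothetical names, and the two stub functions stand in for a real agent run and an LLM-based self-analysis.

```python
from typing import Callable

Trajectory = list[str]  # the steps an agent took during one run


def refine_instructions(
    run_agent: Callable[[list[str]], Trajectory],
    analyze: Callable[[Trajectory, list[str]], list[str]],
    initial_instructions: list[str],
    max_runs: int = 3,
) -> list[str]:
    """Run, self-analyze, and feed the improved instructions into the next run."""
    instructions = list(initial_instructions)
    for _ in range(max_runs):
        trajectory = run_agent(instructions)
        improved = analyze(trajectory, instructions)
        if improved == instructions:
            break  # nothing left to improve, so the trajectory is considered tuned
        instructions = improved
    return instructions


# Stub agent (assumption): it loops on "search" unless told not to.
def fake_run_agent(instructions: list[str]) -> Trajectory:
    if "Do not repeat a failed search." in instructions:
        return ["search", "summarize"]
    return ["search", "search", "search", "summarize"]


# Stub analysis (assumption): a real system would ask an LLM to review the run.
def fake_analyze(trajectory: Trajectory, instructions: list[str]) -> list[str]:
    rule = "Do not repeat a failed search."
    has_repeat = any(a == b for a, b in zip(trajectory, trajectory[1:]))
    if has_repeat and rule not in instructions:
        return instructions + [rule]
    return instructions


if __name__ == "__main__":
    tuned = refine_instructions(
        fake_run_agent, fake_analyze, ["Use the search tool first."]
    )
    print(tuned)
```

Human feedback for the bootstrap run could be modeled by passing a different `analyze` callable for the first iteration, one that returns instructions written by a person instead of generated ones.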